Disaggregated RAID storage changes how storage is architected in modern datacenters. It separates storage from compute so that each can be provisioned and scaled independently. This gives operators more flexibility than storage that is tied to individual servers.

By decoupling storage from compute, organizations can create a more agile and responsive infrastructure that can quickly adapt to changing workloads. This is particularly useful in environments where storage needs are unpredictable or constantly evolving.

Disaggregated RAID storage is typically implemented with software-defined storage, which pools drives and applies RAID in software over the network. Hyper-converged infrastructure is different: it also pools storage in software, but it keeps compute and storage on the same nodes. Software-defined approaches let organizations mix and match storage and compute resources to meet specific needs.

Modern datacenters run a wide range of workloads, from traditional databases to artificial intelligence and machine learning. Disaggregated RAID storage supports this mix by giving each workload the storage capacity and performance it needs, without tying that storage to a particular compute server.


What is RAID Storage

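RAID (Redundant Array of Independent Disks) combines multiple disks into a single logical volume. Most RAID levels protect data with redundancy, either by mirroring it or by storing parity. If one disk fails, the data can still be read or rebuilt from the remaining disks. Some levels, such as RAID 0, stripe data purely for performance and offer no protection.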

Traditional RAID configurations tightly couple storage devices with specific servers, limiting flexibility. Disaggregated RAID storage, on the other hand, allows for a more flexible allocation of storage resources across multiple servers.

Disaggregated RAID storage separates the compute and storage resources in a datacenter, enabling increased utilization and efficiency. This is achieved by pooling storage resources and making them accessible over a network.

Storage devices are pooled and made accessible through a centralized management platform, which can direct storage requests from various compute nodes. This platform is essential for monitoring and managing the storage environment.

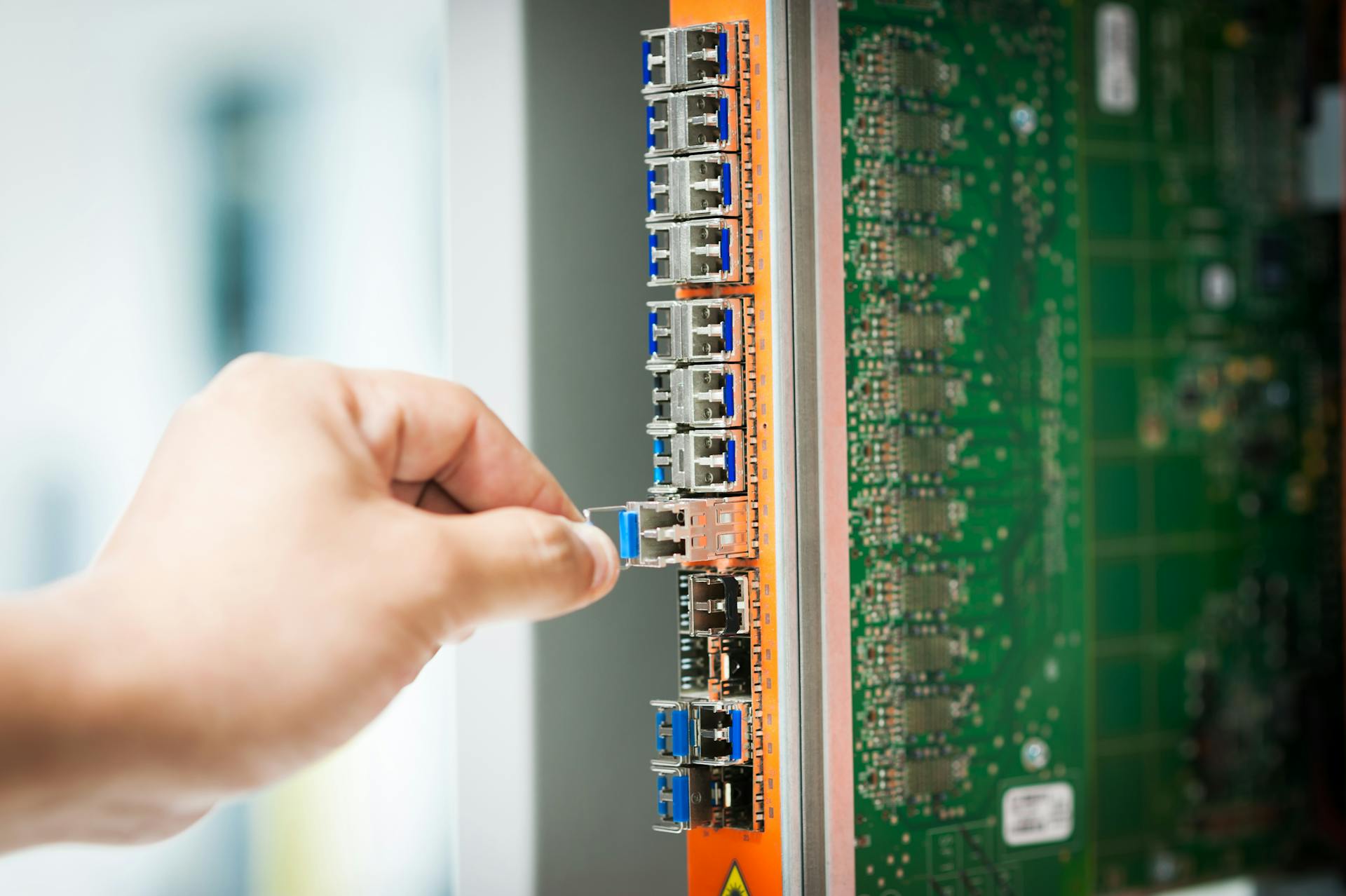

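The main components of a disaggregated RAID storage setup are:

- Storage Devices: drives pooled into a shared resource rather than tied to individual servers.

- Network: the connectivity that makes the pooled storage accessible to compute nodes.

- Centralized Management Platform: directs storage requests from compute nodes and monitors and manages the storage environment.

- Compute Nodes: the servers that run workloads and consume storage over the network.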
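The pooling and request-routing role of the management platform can be sketched in a few lines. This is a minimal, hypothetical model, not any product's API. `Device`, `StoragePool` and `allocate` are illustrative names. Capacity is carved greedily from the devices with the most free space.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """A pooled storage device (hypothetical model)."""
    name: str
    capacity_gb: int
    free_gb: int = field(init=False)

    def __post_init__(self) -> None:
        self.free_gb = self.capacity_gb


@dataclass
class StoragePool:
    """Hypothetical management-platform view of pooled devices."""
    devices: list[Device]
    allocations: dict[str, list[tuple[str, int]]] = field(default_factory=dict)

    def free_gb(self) -> int:
        return sum(d.free_gb for d in self.devices)

    def allocate(self, node: str, size_gb: int) -> list[tuple[str, int]]:
        """Give `node` size_gb of capacity, spread across whichever devices have room."""
        if size_gb > self.free_gb():
            raise ValueError(f"pool has only {self.free_gb()} GB free")
        extents = []
        remaining = size_gb
        # Greedy: take space from the devices with the most free capacity first.
        for device in sorted(self.devices, key=lambda d: d.free_gb, reverse=True):
            if remaining == 0:
                break
            take = min(device.free_gb, remaining)
            if take > 0:
                device.free_gb -= take
                extents.append((device.name, take))
                remaining -= take
        self.allocations.setdefault(node, []).extend(extents)
        return extents
```

For example, with three 100 GB devices, a 150 GB request from one compute node and a 120 GB request from another are both served from the shared pool, leaving 30 GB free. A real platform would also apply RAID across the extents and route I/O over the network. This sketch only tracks capacity.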

Benefits of RAID Storage

RAID storage offers several benefits that make it a popular choice for modern datacenters. Redundant RAID levels improve data availability and reduce the risk of data loss by storing mirrored copies or parity across multiple drives.

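RAID 6, for example, stores two independent parity blocks per stripe. It can therefore survive two simultaneous drive failures and keep data accessible. It protects against drive failures, not site-wide disasters, which still require backups or replication.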
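Each RAID level trades usable capacity for fault tolerance, depending on how much space it spends on redundancy. The helper below is a simplified sketch; the name `raid_summary` is hypothetical. It ignores hot spares and filesystem overhead. For RAID 10 it reports the guaranteed tolerance of one failure. A RAID 10 array can survive more failures if the failed drives are in different mirror pairs.

```python
def raid_summary(level: int, drives: int, drive_tb: float) -> tuple[float, int]:
    """Return (usable capacity in TB, drive failures guaranteed to be survivable)."""
    if level == 0:
        if drives < 2:
            raise ValueError("RAID 0 needs at least 2 drives")
        return drives * drive_tb, 0  # striping only, no redundancy
    if level == 1:
        if drives < 2:
            raise ValueError("RAID 1 needs at least 2 drives")
        return drive_tb, drives - 1  # every drive holds a full copy
    if level == 5:
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_tb, 1  # one drive's worth of parity
    if level == 6:
        if drives < 4:
            raise ValueError("RAID 6 needs at least 4 drives")
        return (drives - 2) * drive_tb, 2  # two drives' worth of parity
    if level == 10:
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return (drives // 2) * drive_tb, 1  # striped mirror pairs
    raise ValueError(f"unsupported RAID level: {level}")
```

Consider eight 4 TB drives. RAID 6 yields 24 TB usable and survives any two failures. RAID 5 yields 28 TB but survives only one.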


RAID can also boost performance by striping data across multiple drives. Large reads and writes are then serviced by several drives in parallel.

Sequential throughput from striping scales roughly with the number of drives in the stripe. As a result, striping can improve sequential read performance by up to 5 times, which suits applications that need high-speed data access. Actual gains depend on the drive count, the controller and the workload.
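Striping cuts the logical volume into fixed-size chunks and assigns consecutive chunks to drives in round-robin order. That is why one large sequential read keeps every drive busy. Below is a minimal sketch of the address mapping for a RAID 0 layout. The function name is hypothetical, the units are blocks, and parity placement is omitted.

```python
def map_block(lba: int, num_disks: int, chunk_blocks: int) -> tuple[int, int]:
    """Map a logical block address to (disk index, block offset on that disk) for RAID 0."""
    chunk = lba // chunk_blocks          # which chunk of the logical volume
    offset_in_chunk = lba % chunk_blocks
    disk = chunk % num_disks             # chunks go to disks round-robin
    stripe = chunk // num_disks          # row of chunks across all disks
    return disk, stripe * chunk_blocks + offset_in_chunk
```

With 4 drives and 128-block chunks, the first 512 logical blocks touch each of the four drives exactly once. Block 513 wraps back to drive 0 at offset 129.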

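Redundant RAID levels also protect data against drive failures. When a drive fails, the array keeps serving data by reconstructing it from mirrors or parity. Periodic scrubbing can detect inconsistencies between data and parity.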

In a RAID 5 configuration, for instance, parity information is distributed across all drives. The array can therefore tolerate the failure of any single drive and rebuild that drive's contents from the surviving drives.
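RAID 5 parity is the bytewise XOR of the data blocks in a stripe. Because XOR is its own inverse, a single missing block equals the XOR of all the surviving blocks plus the parity block. The sketch below shows this for one stripe. A real RAID 5 array also rotates the parity block across drives from stripe to stripe, which is omitted here. `xor_blocks` and `rebuild_missing` are hypothetical helper names.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Bytewise XOR of equal-length blocks."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("all blocks must have the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Reconstruct the one lost data block of a stripe from the survivors and parity."""
    return xor_blocks(surviving + [parity])
```

To use it, compute `parity = xor_blocks(data_blocks)` when writing the stripe. After losing any one data block, pass the remaining blocks and `parity` to `rebuild_missing`.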

RAID presents many drives as a single logical volume. This simplifies data management and reduces the administrative burden of managing multiple storage devices individually.

Disaggregated RAID Storage

Disaggregated RAID storage applies RAID across a shared pool of drives instead of the disks inside a single server. This allows storage to be allocated flexibly across multiple servers.

This approach separates compute and storage resources, enabling organizations to scale their storage independently of compute resources.

Scalability is a significant advantage: organizations can add more storage without provisioning additional servers. Resource optimization also improves. Storage can go to the workloads that need it instead of sitting stranded on servers that do not, which minimizes waste.

Fault isolation is another benefit, as decoupling storage from compute resources allows issues in one area to be contained without affecting the overall system. This leads to increased reliability and reduced downtime.

Disaggregated RAID storage can be implemented in a datacenter by setting up network connectivity and storage devices that support disaggregated architectures. Storage devices are then pooled and made accessible through a centralized management platform, which directs storage requests from various compute nodes.

Here are the key steps to implement disaggregated RAID storage:

- Infrastructure Setup: Equip the datacenter with network connectivity and storage devices that support disaggregated architectures.

- Storage Pooling: Pool storage devices and make them accessible through a centralized management platform.

- RAID Configuration: Apply RAID configurations to improve data redundancy and performance across the network.

- Monitoring and Management: Continuously monitor the storage environment to ensure optimal performance and availability.

By following these steps, organizations can reap the benefits of disaggregated RAID storage and improve their datacenter's efficiency, scalability, and reliability.

Challenges and Considerations

Disaggregated RAID storage has challenges as well. Storage is accessed over the network, so every I/O incurs network latency on top of the device's own latency.


Organizations need a robust, low-latency network to mitigate this. The network is crucial for maintaining storage availability and performance in the data center.
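The cost of network access is easiest to see with a single outstanding I/O. Each request must complete before the next starts, so achievable IOPS is one second divided by the per-I/O latency. The latency figures below are hypothetical placeholders chosen for illustration, not measurements from any system in this article.

```python
def remote_latency_us(device_us: float, network_rtt_us: float, target_overhead_us: float) -> float:
    """Per-I/O latency when the drive sits behind a network: device + round trip + target software."""
    return device_us + network_rtt_us + target_overhead_us


def qd1_iops(latency_us: float) -> float:
    """IOPS with exactly one I/O in flight: one second divided by the per-I/O latency."""
    return 1_000_000 / latency_us


local = 80.0                                   # hypothetical local NVMe read latency, µs
remote = remote_latency_us(local, 20.0, 10.0)  # hypothetical network RTT and target overhead, µs
print(f"local: {qd1_iops(local):.0f} IOPS, remote: {qd1_iops(remote):.0f} IOPS")
```

Under these assumed numbers, the extra 30 µs costs about 27% of single-queue IOPS (12,500 versus about 9,091). Deeper queues hide some of this cost, at the price of more outstanding I/O.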

Managing a disaggregated storage environment can be more complex than traditional configurations. Proper tools and training are required to navigate these systems effectively.

Some applications may not be compatible with disaggregated storage solutions, which could limit deployment options. Consider this when evaluating whether the technology suits your data center's needs.

Software-Defined Storage

Software-defined storage implements storage services such as pooling, RAID and volume management in software rather than in dedicated hardware controllers. This gives organizations greater flexibility and scalability when optimizing their storage resources.

Disaggregated RAID storage is commonly implemented as software-defined storage that separates compute and storage resources. This decoupling enables a more flexible allocation of storage resources across multiple servers, increasing utilization and efficiency.

By implementing software-defined storage, organizations can scale their storage independently of compute resources. If more storage is needed, it can be added without the need to provision additional servers.

Disaggregated RAID storage offers several advantages over traditional storage systems, including scalability, resource optimization, fault isolation, and cost efficiency. Here are some key benefits:

- Scalability: Organizations can easily scale their storage independently of compute resources.

- Resource Optimization: Disaggregated systems allow for better resource allocation, minimizing waste.

- Fault Isolation: Issues in one area can be contained without affecting the overall system, leading to increased reliability.

- Cost Efficiency: Disaggregated systems allow organizations to avoid over-provisioning compute resources just to meet storage needs.
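Simple arithmetic makes the over-provisioning point concrete. In a coupled design, each server brings a fixed amount of local storage, so the cluster must be sized for whichever demand is larger, compute or storage. In a disaggregated design, compute and storage nodes are sized separately. All numbers and function names below are hypothetical, and the sketch compares node counts only, not prices.

```python
import math


def coupled_servers(compute_servers_needed: int, storage_tb: float, tb_per_server: float) -> int:
    """Servers required when storage can only be added by adding whole servers."""
    return max(compute_servers_needed, math.ceil(storage_tb / tb_per_server))


def disaggregated_nodes(compute_servers_needed: int, storage_tb: float,
                        tb_per_storage_node: float) -> tuple[int, int]:
    """(compute nodes, storage nodes) when each tier is sized independently."""
    return compute_servers_needed, math.ceil(storage_tb / tb_per_storage_node)
```

Take a workload that needs 10 servers' worth of compute and 400 TB of storage, with 20 TB per coupled server. The coupled design needs 20 servers, half of them bought only for their drives. The disaggregated design needs 10 compute nodes plus 4 storage nodes of 100 TB each.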

To implement software-defined storage in a datacenter, several steps are required. These include setting up the necessary infrastructure, pooling storage devices, configuring RAID, and monitoring and managing the storage environment.

Performance varies widely between software-defined storage implementations. In a benchmark reported by Lightbits on identical hardware, Lightbits outperformed Ceph. It delivered 16x better performance for 4 KB writes, which are common in databases, and 2x to 16x better performance across read and write tests.

NVMe/TCP and Networking

NVMe/TCP extends NVMe across the entire data center using a simple and efficient TCP/IP fabric.

NVMe over TCP (NVMe/TCP) is widely expected to deliver high performance while reducing deployment cost and design complexity, since it runs over standard TCP/IP networks.

NVMe/TCP does not require RDMA-capable network hardware, which makes it one of the most practical NVMe-oF transports to deploy. This makes it a key component of disaggregated storage in cloud infrastructure.
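On Linux, an NVMe/TCP namespace is typically attached with the `nvme connect` command from nvme-cli. The sketch below only builds the command line and does not run it. The target address and subsystem NQN are placeholders, 4420 is the conventional NVMe/TCP I/O port, and the helper name is hypothetical.

```python
import ipaddress
import shlex

NVME_TCP_PORT = 4420  # conventional NVMe-oF I/O port


def nvme_tcp_connect_cmd(traddr: str, subsys_nqn: str, port: int = NVME_TCP_PORT) -> list[str]:
    """Build an nvme-cli command that connects to an NVMe/TCP subsystem."""
    ipaddress.ip_address(traddr)  # raises ValueError if traddr is not a valid IP address
    if not subsys_nqn.startswith("nqn."):
        raise ValueError("an NVMe Qualified Name must start with 'nqn.'")
    return [
        "nvme", "connect",
        "--transport=tcp",
        f"--traddr={traddr}",
        f"--trsvcid={port}",
        f"--nqn={subsys_nqn}",
    ]


cmd = nvme_tcp_connect_cmd("192.0.2.10", "nqn.2014-08.org.example:subsys1")
print(shlex.join(cmd))
```

To perform the connection, pass this list to `subprocess.run(cmd, check=True)` on a host with nvme-cli installed and the `nvme-tcp` kernel module loaded. This usually requires root privileges.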

High Performance, Low Latency

NVMe/TCP solutions can deliver high performance with consistently low latency, which suits database and analytics workloads.

Quoted figures for such solutions reach 4.7M IOPS and 22 GB/s of throughput, enough for demanding workloads.

Quoted latency is as low as 160 μs. Keeping tail latency low as well speeds up data processing.

This high I/O performance is particularly beneficial for container-based workloads, which require rapid data access and processing.
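Little's law ties the quoted figures together: the average number of I/Os in flight equals throughput in IOPS multiplied by latency. The sketch below applies it to the 4.7M IOPS and 160 μs figures. It assumes, possibly unrealistically, that both figures were achieved in the same test, so the result only indicates the order of magnitude of parallelism required. The function name is hypothetical.

```python
def ios_in_flight(iops: float, latency_us: float) -> float:
    """Little's law: average outstanding I/Os = completion rate x time each I/O spends in the system."""
    return iops * latency_us / 1_000_000


print(ios_in_flight(4_700_000, 160))  # about 752 outstanding I/Os
```

Sustaining such a rate takes hundreds of concurrent requests spread across clients and queues. Only workloads that issue many I/Os in parallel can reach figures like these.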

NVMe/TCP Extends NVMe Across the Data Center


NVMe was designed for high-performance direct-attached PCIe SSDs. NVMe over Fabrics (NVMe-oF) later extended it to support remote pools of SSDs at hyperscale.

Many in the industry expect NVMe-oF to replace Direct Attached Storage (DAS) and become the default protocol for disaggregated storage in cloud infrastructure.

Solution Overview

Our solution for disaggregated RAID storage in modern datacenters is built on a high-performance storage engine that integrates with well-known filesystem services. This integration enables us to deploy software-defined RAID across various environments, including bare-metal, virtual machines, and DPUs.

We focus on three key areas: software-defined RAID, tuned filesystem and optimized server configuration, and RDMA-based interfaces for data access. These components work together to provide high-speed data access required for AI workloads.

Our approach deploys the different elements of the file storage system on demand across the hosts that need them. Disaggregated storage resources are combined into virtual RAID using the xiRAID Opus RAID engine, which needs only a small number of CPU cores.

Volumes are then created and exported to virtual machines via VHOST controllers or NVMe-oF, offering flexible and scalable storage solutions.

Here are the key components of our solution:

- Software-defined RAID: deployable across various environments

- Tuned Filesystem (FS) and Optimized Server Configuration: ensures optimal performance and efficiency

- RDMA-based Interfaces for Data Access: provides high-speed data access required for AI workloads

Testing Results

In the performance comparisons, mdraid showed significant performance losses of up to 50%. xiRAID Opus, by contrast, delivered the interface's full read performance.

For writes, xiRAID Opus reaches approximately 60% of the interface's potential with one or two clients, highlighting its performance in virtualized environments.

With mdraid, sequential operations lose performance. xiRAID Opus, on the other hand, scales effectively, reaching 850k-950k IOPS with two clients.

In contrast, mdraid-based solutions fail to scale effectively, capping small block read performance at 200-250k IOPS per VM due to kernel limitations.

xiRAID Opus nearly approaches 1 million IOPS after connecting three clients, further demonstrating its scalability and efficiency.

In the mdraid + NFS virtual machine, roughly a quarter of the CPU cores run at nearly 100% load.

The xiRAID Opus + NFS virtual machine fully loads only two CPU cores, while around 12% of the remaining cores run at approximately 25% load.
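The two CPU footprints can be compared directly by converting them into core-equivalents of work. The sketch below assumes a hypothetical 32-core VM. It also reads "around 12% of the remaining cores at approximately 25% load" literally. The results do not state the core count, so only the relative difference is meaningful.

```python
def mdraid_core_equivalents(total_cores: int) -> float:
    # About a quarter of the cores at nearly 100% load.
    return total_cores * 0.25 * 1.0


def xiraid_core_equivalents(total_cores: int) -> float:
    # Two fully loaded cores, plus ~12% of the remaining cores at ~25% load.
    return 2 + (total_cores - 2) * 0.12 * 0.25


cores = 32  # hypothetical VM size, not stated in the results
print(f"mdraid: {mdraid_core_equivalents(cores):.1f}, xiRAID Opus: {xiraid_core_equivalents(cores):.1f}")
```

Under these assumptions, the mdraid VM uses roughly 8 core-equivalents and the xiRAID Opus VM roughly 3.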

