The Gemini 1.5 Pro 2M context window on Google Cloud Platform lets developers send up to two million tokens in a single request. That opens up natural language processing and machine learning tasks that previously did not fit in one prompt.

With the Gemini 1.5 Pro 2M context window, you can pass very large inputs to the model in one request, which suits applications that need to reason over long documents, transcripts, or codebases as a whole rather than in pieces.

Key Features

Gemini 1.5 Pro has a context window of up to two million tokens, which Google described at launch as the longest of any large-scale foundation model.
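
To get a feel for what a two-million-token budget means in practice, a rough size check can be sketched as follows. The four-characters-per-token ratio is only a common rule of thumb for English text, not Gemini's actual tokenizer, and `estimate_tokens`, `fits_in_context`, and the `reserve_for_output` margin are hypothetical names and values chosen for illustration. For exact numbers, count tokens through the API itself.

```python
import math

CONTEXT_LIMIT_TOKENS = 2_000_000  # window size stated in the article
CHARS_PER_TOKEN = 4               # assumption: rough heuristic, not the real tokenizer


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Roughly estimate the token count of `text` from its character length."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, reserve_for_output: int = 8_192,
                    limit: int = CONTEXT_LIMIT_TOKENS) -> bool:
    """Return True if the estimated prompt size plus a reply margin fits the limit.

    `reserve_for_output` is an assumed safety margin, not a documented rule.
    """
    return estimate_tokens(text) + reserve_for_output <= limit


if __name__ == "__main__":
    # A synthetic "codebase": 100,000 copies of a two-line function.
    sample = "def add(a, b):\n    return a + b\n" * 100_000
    print(estimate_tokens(sample), fits_in_context(sample))
```

Because the estimate is coarse, it is best treated as an early warning rather than a guarantee that a request will be accepted.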

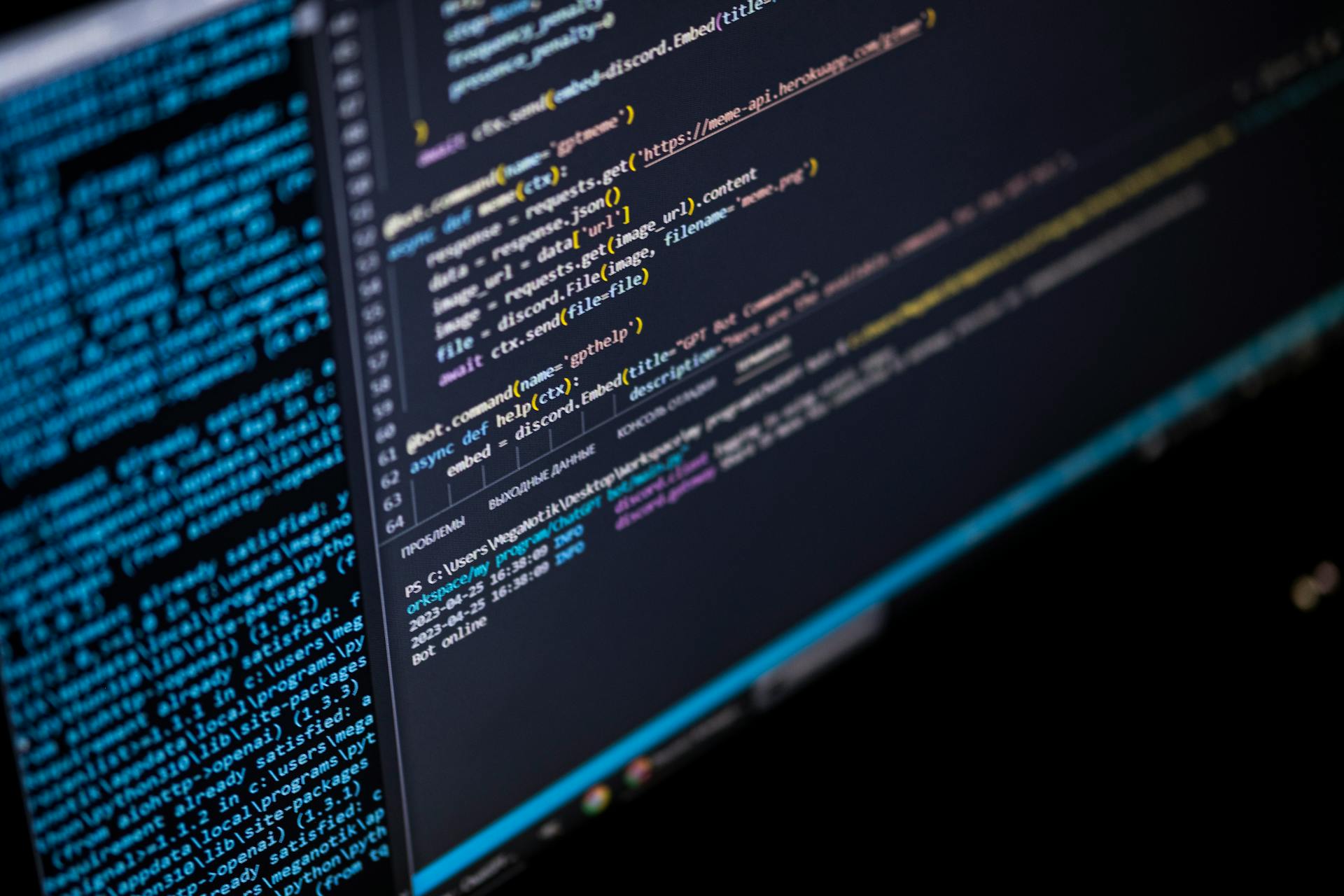

This capacity lets Gemini analyze large codebases, handling more than 100,000 lines of code in a single session.

Gemini 1.5 Pro is well suited to common coding tasks such as code generation, analysis, and optimization.

Developers can give Gemini 1.5 Pro more than 100,000 lines of code and have it suggest helpful modifications and explain how different parts of the code work.
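
One way to prepare a codebase for a single long-context request is to concatenate its files into one prompt string, with a header per file. The sketch below uses only the Python standard library. `pack_codebase` and the `# FILE:` header format are hypothetical choices for illustration, not part of any Gemini SDK.

```python
from pathlib import Path


def pack_codebase(root: str, suffixes=(".py",)) -> tuple[str, int]:
    """Concatenate matching source files under `root` into one prompt string.

    Returns the combined text and the total number of source lines.
    """
    parts = []
    total_lines = 0
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix in suffixes:
            source = path.read_text(encoding="utf-8", errors="replace")
            total_lines += len(source.splitlines())
            # A path header lets the model refer to files by name.
            name = path.relative_to(root).as_posix()
            parts.append(f"# FILE: {name}\n{source}")
    return "\n\n".join(parts), total_lines


if __name__ == "__main__":
    prompt_body, line_count = pack_codebase(".")
    print(f"packed {line_count} lines into {len(prompt_body)} characters")
```

The returned text can then be combined with a question, such as a request to explain a module or suggest a refactoring, and sent as a single prompt.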

With the code execution feature, the model can generate and run Python code and learn iteratively from the results until it reaches a desired final output.
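
The generate, run, and refine loop described above can be illustrated locally. This is a minimal sketch of the control flow only, not Google's implementation: `run_until_ok` and `propose_code` are hypothetical names, `propose_code` stands in for a model call, and `exec` in the current process is not a sandbox, so untrusted code should never be run this way.

```python
import traceback
from typing import Callable, Optional


def run_until_ok(propose_code: Callable[[Optional[str]], str],
                 is_done: Callable[[object], bool],
                 max_rounds: int = 5) -> object:
    """Ask for code, run it, and feed failures back until the result is accepted.

    `propose_code(feedback)` returns Python source that assigns `result`.
    `feedback` is None on the first round, then a description of the last failure.
    """
    feedback = None
    for _ in range(max_rounds):
        source = propose_code(feedback)
        namespace: dict = {}
        try:
            exec(source, namespace)  # NOT a sandbox; illustration only
        except Exception:
            # Pass the traceback back so the next attempt can correct the error.
            feedback = traceback.format_exc()
            continue
        result = namespace.get("result")
        if is_done(result):
            return result
        feedback = f"result was {result!r}, which was not accepted"
    raise RuntimeError("no acceptable result within max_rounds")


if __name__ == "__main__":
    # Scripted stand-in for a model: the first attempt has a bug, the second fixes it.
    attempts = iter(["result = sum(range(1, 11)) / 0",
                     "result = sum(range(1, 11))"])
    print(run_until_ok(lambda fb: next(attempts), lambda r: r == 55))
```

In the hosted feature, both the code generation and the execution happen on the service side; the loop here only shows why feeding results back into the next attempt lets the output converge.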

Gemini 1.5 Pro is natively multimodal, and its long context window lets it give developers detailed insights and explanations for large or complex codebases.

The code execution sandbox is not connected to the internet and comes standard with a few numerical libraries. Developers are billed based on the output tokens from the model.

Developers using GitHub Copilot will be able to select Gemini 1.5 Pro in GitHub Copilot Chat, in Visual Studio Code, and in the Copilot extensions for Visual Studio.

Frequently Asked Questions

What is the context limit of Gemini 1.5 Pro?

Gemini 1.5 Pro has a context window of 2 million tokens. The larger limit lets a single request hold longer conversations, documents, or codebases, which supports more complex and nuanced interactions.

What is the context size of the Gemini Pro API?

The Gemini 1.5 Pro API initially had a default context size of 128K tokens, with some users having access to 1M tokens, before the 2M-token window described above became available. Google has internally tested context sizes of up to 10M tokens, but these are not currently available.

What is Gemini 1.5 Pro good for?

Gemini 1.5 Pro is well suited to AI applications that must understand and respond to lengthy inputs, such as long conversations or in-depth text analysis. Its large context window lets it keep large amounts of information in view at once, which makes it useful for a range of NLP tasks.

Sources

- https://developers.googleblog.com/en/new-features-for-the-gemini-api-and-google-ai-studio/

- https://www.infoq.com/news/2024/11/gemini-github-copilot/

- https://www.infoworld.com/article/2510442/google-opens-access-to-2-million-context-window-of-gemini-1-5-pro.html

- https://venturebeat.com/ai/google-gemini-1-5-flash-rapid-multimodal-model-announced/

- https://www.cnet.com/tech/services-and-software/googles-gemini-1-5-pro-will-have-2-million-tokens-heres-what-that-means/
