A scalable internet data center is a must-have for businesses that require high-performance computing and storage. According to section 3, "Designing the Data Center Infrastructure", a scalable data center can handle increasing demand with minimal downtime and cost.

A key to building a scalable data center is the use of AI-powered automation tools. These tools can monitor and adjust the data center's infrastructure in real time, ensuring that resources are allocated efficiently. As mentioned in section 5, "Implementing AI-Powered Automation", AI can predict and prevent equipment failures, reducing maintenance costs and improving overall efficiency.

To further enhance scalability, businesses can use cloud-based services that offer on-demand computing resources. Section 2, "Choosing the Right Cloud Services", highlights the benefits of cloud computing, including reduced capital expenditures and increased flexibility. By leveraging cloud services, businesses can quickly scale up or down to meet changing demands without incurring significant costs.

Innovation and Efficiency

Juniper's data center solutions are the fastest and most flexible way to deploy high-performing networks, and the simplest to operate and automate for teams of any size.

Juniper Apstra delivers reliable intent-based automation and operational excellence throughout the entire life cycle, without vendor lock-in. This means businesses can focus on innovation, not just maintaining their infrastructure.

Juniper's switching, routing, and security platforms deliver a scalable data center foundation with resilient, automated fabrics and built-in threat prevention. This ensures a secure and reliable network, even as data center operations become increasingly complex.

Data centers are adopting various energy efficiency measures to reduce their carbon footprint. Some of these measures include modernizing cooling systems, adopting free cooling, and recovering and reusing excess heat to warm nearby households and offices.

Here are some key energy efficiency measures data centers are adopting:

- Modernizing cooling systems.

- Adopting free cooling.

- Isolating IT rooms with a cold aisle/hot aisle configuration.

- Recovering and reusing data centers’ excess heat.

- Using artificial intelligence to predict power usage and optimize cooling systems.

- Prioritizing the use of 100% clean and renewable energies.

By embracing innovation and efficiency, data centers can reduce their environmental impact and improve their overall performance.

Scale Innovation with AI

Businesses require fast and reliable on-premises data center networks that perform like the cloud. However, as data center network operations become increasingly complex, skilled IT resources are in short supply.

Juniper Apstra delivers reliable intent-based automation and operational excellence throughout the entire life cycle. It works over a wide range of topologies and switches without vendor lock-in.

Juniper's switching, routing, and security platforms deliver a scalable data center foundation with resilient, automated fabrics and built-in threat prevention. This ensures Zero-Trust data center architectures that safeguard users, applications, data, and infrastructure.

Juniper's AI-optimized data center fabrics provide advanced traffic management capabilities, ensuring high bandwidth, low latency, lossless, and scalable performance over Ethernet. They reduce costs, accelerate innovation, and help avoid vendor lock-in.

Juniper Validated Designs offer confidence and speed, giving you up to 10x better reliability. This makes it easier to deploy high-performing AI training, inference, and storage clusters with speed and flexibility.

Efficiency Measures

Efficiency measures are a crucial aspect of innovation in the data center industry. Modernizing cooling systems is a key measure, allowing data centers to reduce their energy consumption and carbon footprint.

Data centers are adopting various energy efficiency measures to reduce their environmental impact. Large-scale data centers also make better use of their physical hardware than traditional on-premises data centers do.

Adopting free cooling is another measure being implemented by data centers. This involves using natural cooling methods, such as outside air, to cool the data center instead of relying on mechanical systems.
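The decision behind free cooling can be sketched as a simple mode selector. This is a minimal sketch, assuming hypothetical setpoints (`SUPPLY_SETPOINT_C`, `APPROACH_C`) and looking at outside air temperature only; real economizer controls also weigh humidity, air quality, and the equipment's allowable inlet range.

```python
from enum import Enum


class CoolingMode(Enum):
    FREE = "free cooling (outside air only)"
    PARTIAL = "partial free cooling (outside air plus chillers)"
    MECHANICAL = "mechanical cooling (chillers only)"


# Hypothetical setpoints in degrees Celsius; real values depend on the site
# and on the allowable inlet temperature of the IT equipment.
SUPPLY_SETPOINT_C = 24.0
APPROACH_C = 3.0  # margin by which outside air must undercut the setpoint


def select_mode(outside_temp_c: float) -> CoolingMode:
    """Pick a cooling mode from the outside air temperature alone."""
    if outside_temp_c <= SUPPLY_SETPOINT_C - APPROACH_C:
        return CoolingMode.FREE        # outside air is cold enough on its own
    if outside_temp_c <= SUPPLY_SETPOINT_C:
        return CoolingMode.PARTIAL     # outside air helps, chillers top up
    return CoolingMode.MECHANICAL      # outside air is too warm to use


for temp in (10.0, 22.5, 30.0):
    print(f"{temp:>5.1f} C -> {select_mode(temp).value}")
```

The more hours per year a site spends in the first branch, the less energy its chillers consume, which is why climate weighs heavily in where free-cooled data centers are built.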

Isolating IT rooms with a cold aisle/hot aisle configuration is also becoming more common. This layout keeps the cold air drawn into the equipment separate from the hot exhaust air, which improves cooling efficiency.

Recovering and reusing data centers' excess heat to warm nearby households, offices, and schools is a creative way to reduce waste. This approach not only saves energy but also provides a valuable resource to the community.

Using Artificial Intelligence (AI) is another innovative approach to improving energy efficiency in data centers. AI can predict power usage, optimize cooling systems, and improve the Power Usage Effectiveness (PUE) ratio.
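PUE is the ratio of the total energy a facility draws to the energy delivered to its IT equipment, so a value of 1.0 would mean zero overhead for cooling, power conversion, and lighting. The minimal sketch below computes it; the monthly readings are hypothetical and only illustrate the arithmetic.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical monthly readings: the IT load stays the same while
# better cooling control cuts the facility overhead.
before = pue(total_facility_kwh=1_500_000, it_equipment_kwh=1_000_000)
after = pue(total_facility_kwh=1_200_000, it_equipment_kwh=1_000_000)
print(f"PUE before: {before:.2f}, after: {after:.2f}")
```

In this example the overhead falls from 0.5 kWh to 0.2 kWh for every kWh of IT work, which is the kind of gain AI-driven cooling optimization aims for.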

Prioritizing the use of 100% clean and renewable energies is also a key strategy for reducing a data center's carbon footprint. This not only benefits the environment but also helps to reduce energy costs in the long run.

Unified Zero Trust Security

Unified Zero Trust Security is a game-changer for protecting your data center. Juniper's Validated Designs (JVDs) assure a complete data center solution, from switching and routing to operations and security.

Juniper's broad portfolio of switches drives scale and performance, while its comprehensive data center security portfolio, with unified threat prevention, delivers Zero-Trust data center protection. This safeguards users, applications, data, and infrastructure across all network connection points.

Analysys Mason researched the key motivations and challenges for data center automation. One key finding: DIY network automation often doesn’t improve productivity.

Juniper's automated secure data center unites multivendor automation and assured reliability of intent-based networking software with scalable and programmable switching and routing platforms.

Juniper Data Center Security protects your distributed centers of data by operationalizing security and extending Zero Trust across networks. This prevents threats with proven efficacy, safeguarding users, data, and infrastructure across hybrid environments.

Here's a breakdown of the benefits of Juniper's Unified Zero Trust Security:

- 10x faster deployment of new services

- Unified management and context-driven network-wide visibility

- A single policy framework for security
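A single policy framework with Zero Trust semantics comes down to default deny: a request is allowed only if it passes a posture check and matches an explicit rule, no matter which part of the network it comes from. The sketch below is a hypothetical illustration of that principle, not Juniper's policy model; the `Request`, `Rule`, and `POLICY` names and the device-compliance check are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    application: str
    device_compliant: bool  # hypothetical posture check (e.g. patched OS)


@dataclass(frozen=True)
class Rule:
    user: str
    application: str


# Hypothetical policy: the only access that exists is what is listed here.
POLICY = frozenset({
    Rule(user="alice", application="billing-db"),
    Rule(user="bob", application="web-frontend"),
})


def is_allowed(request: Request, policy: frozenset = POLICY) -> bool:
    """Default deny: verify every request, whatever network segment it comes from."""
    if not request.device_compliant:
        return False  # failed posture check
    return Rule(request.user, request.application) in policy


print(is_allowed(Request("alice", "billing-db", device_compliant=True)))
print(is_allowed(Request("alice", "billing-db", device_compliant=False)))
print(is_allowed(Request("bob", "billing-db", device_compliant=True)))
```

Because one rule set answers every access question, the same policy can be enforced consistently at each connection point instead of being re-implemented per device.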

Juniper Solutions

Juniper has developed a range of network security products designed to protect data centers from cyber threats.

Their SRX line of firewalls is a key component of their security solutions, providing advanced threat protection and security management capabilities.

The SRX firewalls are designed to be highly scalable and can be easily integrated with other Juniper products to provide a comprehensive security solution.

Juniper's Network Director is a management platform that provides visibility and control over the entire network, including data centers.

It allows administrators to monitor and manage network traffic, identify security threats, and take corrective action in real time.

Juniper's data center solutions also include their QFX line of switches, which provide high-speed connectivity and low latency for data center networks.

These switches are designed to be highly reliable and can operate in a wide range of environments, from small to large data centers.

Juniper's data center solutions are designed to provide a secure, scalable, and reliable infrastructure for businesses of all sizes.

Networking and Infrastructure

Google's data center network is a marvel of scalability and fault tolerance, with a capacity that scales in the terabit per second range. The network starts at the rack level, where custom-made 19-inch racks contain 40 to 80 servers connected via 1 Gbit/s Ethernet links to the top of rack switch (TOR).

The TOR switches are then connected to a gigabit cluster switch using multiple gigabit or ten gigabit uplinks, forming the datacenter interconnect fabric. Google has adopted various modified Clos topologies to support fault tolerance and accommodate low-radix switches.
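The rack figures above make it easy to reason about oversubscription at the TOR switch, meaning how much server-facing bandwidth competes for each unit of uplink bandwidth. The 40 to 80 servers per rack at 1 Gbit/s come from the text; the four 10 Gbit/s uplinks are a hypothetical choice, because the text only says "multiple gigabit or ten gigabit uplinks".

```python
def oversubscription(servers: int, server_link_gbps: float,
                     uplinks: int, uplink_gbps: float) -> float:
    """Ratio of server-facing bandwidth to uplink bandwidth at a TOR switch."""
    if uplinks <= 0 or uplink_gbps <= 0:
        raise ValueError("a TOR switch needs at least one working uplink")
    downstream = servers * server_link_gbps  # total bandwidth the servers can offer
    upstream = uplinks * uplink_gbps         # total bandwidth toward the cluster switch
    return downstream / upstream


# Server counts and link speed from the text; four 10 Gbit/s uplinks are hypothetical.
print(oversubscription(40, 1, uplinks=4, uplink_gbps=10))
print(oversubscription(80, 1, uplinks=4, uplink_gbps=10))
```

With these numbers a 40-server rack is not oversubscribed (1:1), while an 80-server rack is 2:1, so traffic leaving a full rack can receive at most half the servers' combined line rate.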

Google's network is highly distributed, with 67 public exchange points and 69 different locations across the world, providing direct connections to as many ISPs as possible at the lowest possible cost. This public network is used to distribute content to Google users as well as to crawl the internet to build its search indexes.

Juniper Apstra

Juniper Apstra offers intent-based networking software that automates the entire network life cycle from design through everyday operations.

This software continuously validates network performance, providing powerful analytics and root cause identification to ensure reliability.
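Continuous validation in intent-based networking means repeatedly comparing the state the operator declared with the state the network actually reports. The sketch below shows that comparison in miniature; the device names, the `bgp_peers` and `mtu` fields, and the telemetry format are hypothetical and do not reflect Apstra's data model or API.

```python
# Hypothetical intent and telemetry snapshots, for illustration only.
INTENDED = {
    "leaf1": {"bgp_peers": ("spine1", "spine2"), "mtu": 9216},
    "leaf2": {"bgp_peers": ("spine1", "spine2"), "mtu": 9216},
    "leaf3": {"bgp_peers": ("spine1", "spine2"), "mtu": 9216},
}

OBSERVED = {
    "leaf1": {"bgp_peers": ("spine1", "spine2"), "mtu": 9216},
    "leaf2": {"bgp_peers": ("spine1",), "mtu": 1500},
    # leaf3 has sent no telemetry
}


def validate(intended: dict, observed: dict) -> list[str]:
    """Return one anomaly message per deviation from the declared intent."""
    anomalies = []
    for device, want in intended.items():
        have = observed.get(device)
        if have is None:
            anomalies.append(f"{device}: no telemetry received")
            continue
        for key, expected in want.items():
            actual = have.get(key)
            if actual != expected:
                anomalies.append(f"{device}: {key} expected {expected!r}, got {actual!r}")
    return anomalies


for anomaly in validate(INTENDED, OBSERVED):
    print(anomaly)
```

Running this check on every telemetry update, rather than once at deployment, is what turns a configuration tool into continuous assurance; root cause analysis then starts from the specific anomalies reported.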

Juniper Apstra Cloud Services take this a step further by bringing AI-driven operations to the data center, expanding data center assurance from network to application assurance.

These cloud-based services address a range of data center operations challenges, making it easier to manage complex networks.

With Juniper Apstra, you can enjoy continuous validation, powerful analytics, and root cause identification, all designed to assure reliability and simplify network management.

By automating the network life cycle, Juniper Apstra saves time and reduces the risk of human error, making it a valuable tool for any organization.

Networking

Google's network is a behemoth, with direct connections to 67 public exchange points and 69 different locations across the world.

To run such a large network, Google has a very open peering policy, allowing it to access the internet at the lowest possible cost.

Google does not publish the details of its private network, but it is known to use custom-built high-radix switch-routers with a capacity of 128 × 10 Gigabit Ethernet ports.

These routers are connected to DWDM devices to interconnect data centers and points of presence via dark fiber.

Google's data center network topology has been designed to support fault tolerance, increase scale, and accommodate low-radix switches.

Google has adopted various modified Clos topologies to achieve this.
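A Clos topology builds a large fabric out of many small, low-radix switches. The sketch below models the simplest case, a two-tier folded Clos (leaf-spine) in which every switch has the same port count and each leaf uses half its ports for servers and half for uplinks, one to each spine. It illustrates the general technique under those assumptions and is not Google's actual design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LeafSpine:
    """Two-tier folded Clos fabric built from identical fixed-radix switches."""
    radix: int         # ports per switch
    link_gbps: float   # speed of every fabric link

    def __post_init__(self) -> None:
        if self.radix < 2 or self.radix % 2:
            raise ValueError("radix must be an even number of at least 2")

    @property
    def spines(self) -> int:
        # Each leaf has radix/2 uplinks, one to every spine.
        return self.radix // 2

    @property
    def leaves(self) -> int:
        # Every spine port connects to a distinct leaf.
        return self.radix

    @property
    def server_ports(self) -> int:
        # Each leaf keeps radix/2 ports facing servers.
        return self.leaves * (self.radix // 2)

    def paths_between_leaves(self, failed_spines: int = 0) -> int:
        # Each surviving spine offers one equal-cost path between two leaves.
        return max(self.spines - failed_spines, 0)

    def uplink_capacity_gbps(self, failed_spines: int = 0) -> float:
        # Per-leaf uplink capacity that survives spine failures.
        return self.paths_between_leaves(failed_spines) * self.link_gbps


fabric = LeafSpine(radix=8, link_gbps=10.0)
print(fabric.spines, fabric.leaves, fabric.server_ports)  # 4 8 32
print(fabric.uplink_capacity_gbps(failed_spines=1))       # 30.0
```

Losing one of four spines removes a quarter of each leaf's uplink capacity rather than disconnecting any leaf, which is the fault-tolerance property described above; scaling further means adding tiers or using higher-radix switches.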

Google's network is a key component of its content delivery network, which is one of the largest and most complex in the world.

Google's network is designed to scale in the terabit per second range, with two fully loaded routers providing a bisection bandwidth of 1,280 Gbit/s.

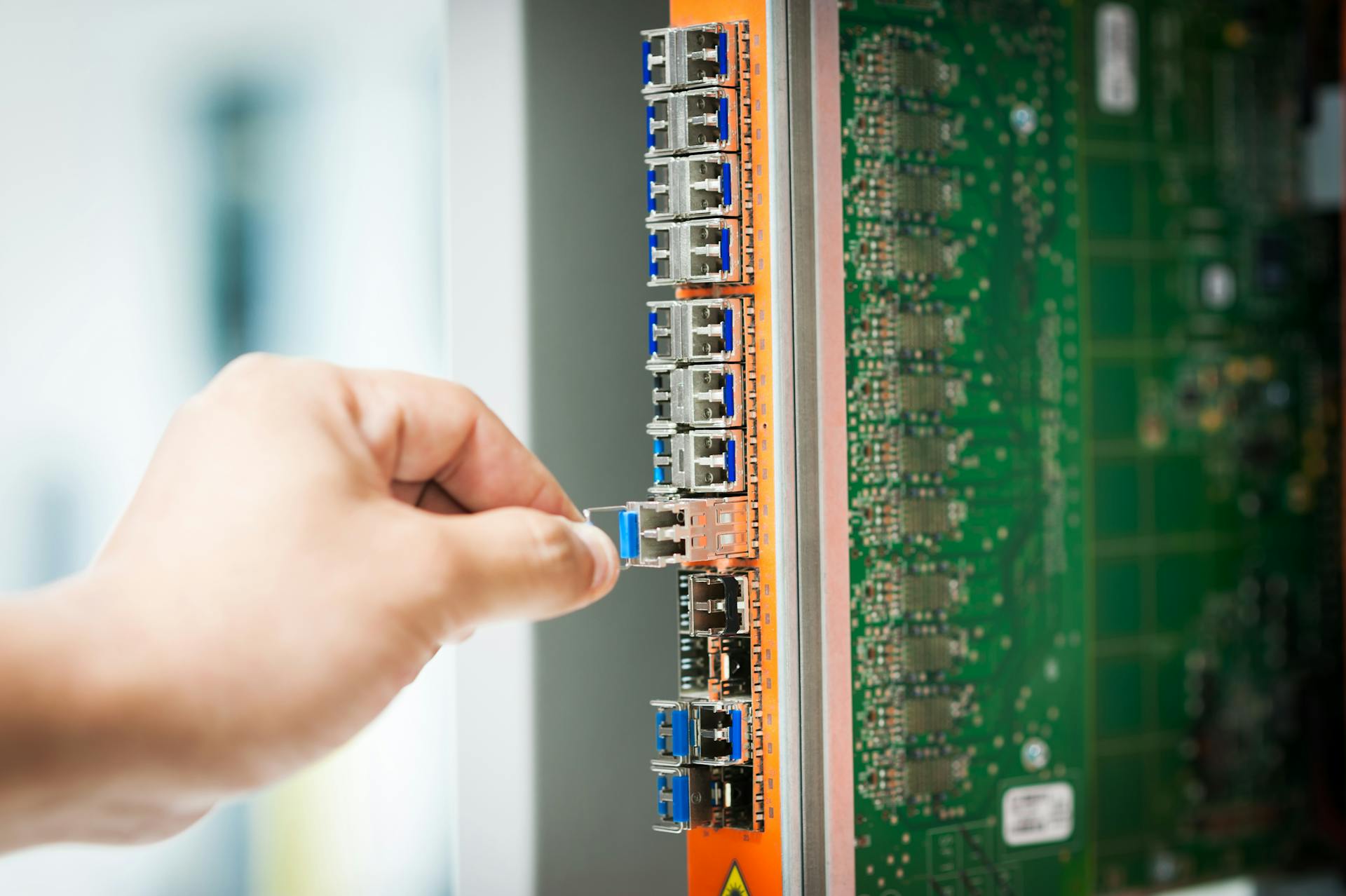

Optics

Juniper offers a complete portfolio of standards-compliant optics that delivers leading performance and operational simplicity for deployments across WAN, data center, and enterprise networks.

Their optics portfolio includes direct-detect and coherent optical transceivers that provide high-speed data transmission.

Application-specific pluggables are also part of their portfolio, allowing for customized solutions that meet specific network requirements.

Optical and electrical cables are included in their offerings, providing a comprehensive range of connectivity options.

This broad portfolio enables network administrators to choose the right optics for their specific needs, ensuring optimal network performance and efficiency.

Server Types

Google's server infrastructure is divided into several types, each assigned to a different purpose. These types include web servers, data-gathering servers, index servers, document servers, ad servers, and spelling servers.

Web servers are responsible for executing queries sent by users and formatting the result into an HTML page. They send queries to index servers, merge the results, compute their rank, retrieve a summary for each hit using the document server, ask for suggestions from the spelling servers, and finally get a list of advertisements from the ad server.

Data-gathering servers are permanently dedicated to spidering the Web, updating the index and document databases, and applying Google's algorithms to assign ranks to pages. Google's web crawler is known as GoogleBot.

Index servers contain a set of index shards and return a list of document IDs ("docids") such that the document corresponding to each docid contains the query word. These servers need less disk space but carry the heaviest CPU workload.
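An index server is essentially one shard of an inverted index: a map from each word to the docids of the documents that contain it. The sketch below partitions documents across shards by docid and merges the results of a single-word query; the modulo partitioning, the whitespace tokenizer, and the sample documents are simplifying assumptions, not Google's scheme.

```python
from collections import defaultdict


class IndexShard:
    """One shard of an inverted index: word -> set of docids."""

    def __init__(self) -> None:
        self.postings: defaultdict[str, set[int]] = defaultdict(set)

    def add(self, docid: int, text: str) -> None:
        for word in text.lower().split():
            self.postings[word].add(docid)

    def lookup(self, word: str) -> set[int]:
        # .get avoids creating empty postings for unknown words.
        return self.postings.get(word.lower(), set())


def build_shards(docs: dict[int, str], num_shards: int) -> list[IndexShard]:
    # Hypothetical partitioning: each document lands on shard docid % num_shards.
    shards = [IndexShard() for _ in range(num_shards)]
    for docid, text in docs.items():
        shards[docid % num_shards].add(docid, text)
    return shards


def search(shards: list[IndexShard], word: str) -> list[int]:
    # Fan the query out to every shard and merge the docid lists.
    hits: set[int] = set()
    for shard in shards:
        hits |= shard.lookup(word)
    return sorted(hits)


docs = {1: "Data center cooling", 2: "Cloud data storage", 3: "Cooling towers"}
shards = build_shards(docs, num_shards=2)
print(search(shards, "cooling"))  # [1, 3]
```

Each shard only scans its own postings, which is why this work is CPU-bound rather than disk-bound: the lists are compact, but every query touches every shard.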

Document servers store documents, with each document stored on dozens of document servers. When performing a search, a document server returns a summary for the document based on query words and can also fetch the complete document when asked. These servers need more disk space.

Ad servers manage advertisements offered by services like AdWords and AdSense.

Spelling servers make suggestions about the spelling of queries.

The different server types work together to provide a seamless search experience.
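The request flow the web servers coordinate can be sketched with in-process stand-ins for each server type. Everything here is hypothetical: the sample index and documents, ranking by ascending docid as a placeholder, a summary that is just the first three words of the document, and `difflib.get_close_matches` from Python's standard library standing in for the spelling servers. In production, each role is a separate fleet of machines reached over the network.

```python
import difflib

# Hypothetical in-memory data standing in for each server fleet.
INDEX = {"cooling": [3, 1], "data": [1, 2]}  # word -> docids
DOCS = {
    1: "Data center cooling basics",
    2: "Cloud data storage",
    3: "Cooling towers explained",
}
ADS = {"cooling": ["Chillers for sale"]}


def index_server(word: str) -> list[int]:
    return INDEX.get(word, [])


def document_server(docid: int) -> str:
    # A real document server builds a query-specific summary; this returns the first words.
    return " ".join(DOCS[docid].split()[:3])


def spelling_server(word: str) -> list[str]:
    if word in INDEX:
        return []
    return difflib.get_close_matches(word, list(INDEX), n=1)


def ad_server(word: str) -> list[str]:
    return ADS.get(word, [])


def web_server(query: str) -> str:
    """Query the index, rank, fetch summaries, add suggestions and ads, render HTML."""
    word = query.strip().lower()
    ranked = sorted(index_server(word))  # placeholder ranking: ascending docid
    parts = [f"<p>Did you mean {s}?</p>" for s in spelling_server(word)]
    items = "".join(f"<li>{document_server(d)}</li>" for d in ranked)
    parts.append(f"<ul>{items}</ul>")
    parts.extend(f"<aside>{ad}</aside>" for ad in ad_server(word))
    return "\n".join(parts)


print(web_server("cooling"))
print(web_server("coling"))
```

Keeping the web server as a thin coordinator lets each specialized fleet scale independently: index servers for CPU, document servers for disk.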

Virtual

Virtual data centers are a game-changer for IT agility and cost reduction. By eliminating many of the challenges of traditional data centers, virtual data centers allow for increased flexibility and security.

A virtual data center is essentially a completely independent data center architecture, ensuring maximum security and high availability. This abstraction of the physical data center using virtualization allows service providers to be more competitive.

Each virtual data center can be hosted on a single, physical data center, making it a cost-effective solution. This setup also enables service providers to provide computing, storage, and networking resources as a service to customers.
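Carving one physical site into virtual data centers that are each offered as a service can be sketched as capacity accounting over a shared pool. The resource names, capacities, and tenant requests below are hypothetical, and a real platform also enforces isolation at the hypervisor, storage, and network layers, which this sketch does not model.

```python
class PhysicalDataCenter:
    """Tracks how much of one physical site is allocated to each virtual data center."""

    def __init__(self, capacity: dict[str, int]) -> None:
        self.capacity = dict(capacity)
        self.vdcs: dict[str, dict[str, int]] = {}

    def free(self) -> dict[str, int]:
        """Capacity not yet allocated to any virtual data center."""
        return {
            kind: total - sum(vdc.get(kind, 0) for vdc in self.vdcs.values())
            for kind, total in self.capacity.items()
        }

    def create_vdc(self, tenant: str, request: dict[str, int]) -> bool:
        """Reserve resources for a tenant; refuse if the physical pool can't cover them."""
        if tenant in self.vdcs:
            raise ValueError(f"{tenant} already has a virtual data center")
        available = self.free()
        if any(amount > available.get(kind, 0) for kind, amount in request.items()):
            return False  # not enough physical capacity left
        self.vdcs[tenant] = dict(request)
        return True


site = PhysicalDataCenter({"vcpus": 1000, "storage_tb": 500, "network_gbps": 400})
print(site.create_vdc("tenant-a", {"vcpus": 600, "storage_tb": 200, "network_gbps": 100}))
print(site.create_vdc("tenant-b", {"vcpus": 500, "storage_tb": 100, "network_gbps": 100}))
print(site.free())
```

Here tenant-b is refused because only 400 vCPUs remain, which shows why providers track the shared pool centrally even though each tenant sees what looks like its own data center.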

Virtual data centers are also known as private clouds, cloud data centers, or software-defined data centers (SDDC). This terminology highlights the flexibility and scalability of virtual data centers.

Original Hardware

The original hardware used by Google back in the day was quite impressive. Google's first main machine was a Sun Microsystems Ultra II with dual 200 MHz processors and 256 MB of RAM.

This machine was the brain behind the original Backrub system. Google's first search ran on two 300 MHz dual Pentium II servers donated by Intel, which included 512 MB of RAM and 10 x 9 GB hard drives between the two.

The F50 IBM RS/6000, donated by IBM, was another key player in Google's early days. It came with 4 processors, 512 MB of memory, and 8 x 9 GB hard disk drives.

Google also had two additional boxes with 3 x 9 GB hard drives and 6 x 4 GB hard disk drives respectively, which were attached to the Sun Ultra II. These were the original storage for Backrub.

Other notable hardware included a disk expansion box with 8 x 9 GB hard disk drives donated by IBM, and a homemade disk box containing 10 x 9 GB SCSI hard disk drives.

Private Suites

In a data center, companies can rent private suites to host their IT infrastructure. These suites are essentially rooms where servers and storage systems can be housed to process and store a company's data.

Some companies have a cage or a few racks, while others can have a whole private suite to host multiple racks. The size of the space depends on the company's needs.

Data centers provide a technical space with a raised floor that's been designed for optimal efficiency. This floor has electrical sockets installed under it to connect the racks, making it easy to manage the infrastructure.

Large-scale data centers implement multiple safety mechanisms to prevent accidents and ensure the smooth operation of the data center. Some of these mechanisms include:

- Redundant power supply systems.

- Diesel backup generators.

- Redundant and very efficient cooling systems.

- Fire detection and extinguishment.

- Water leak detectors and security controls.
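Redundant power and cooling pay off because the system keeps running as long as enough units survive. Assuming units fail independently, the availability of a design that needs k of n identical units, each available with probability a, is the binomial sum of the probabilities that i = k, ..., n units are up. The 99% unit availability and the two-unit load below are hypothetical numbers chosen only to show the effect of adding one spare (N+1).

```python
from math import comb


def k_of_n_availability(a: float, n: int, k: int) -> float:
    """Probability that at least k of n independent units are up,
    when each unit is up with probability a."""
    if not 0 <= k <= n:
        raise ValueError("need 0 <= k <= n")
    return sum(comb(n, i) * a**i * (1 - a) ** (n - i) for i in range(k, n + 1))


unit = 0.99  # hypothetical availability of one power or cooling unit
print(f"N   (2 of 2 units): {k_of_n_availability(unit, n=2, k=2):.6f}")
print(f"N+1 (2 of 3 units): {k_of_n_availability(unit, n=3, k=2):.6f}")
```

Adding a single spare raises availability from about 0.9801 to about 0.9997 in this example. Real failures are not fully independent (a shared fuel supply or a shared room can take out several units at once), which is why sites also separate redundant equipment physically.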

How We Do It

We use a combination of hardware and software to manage our network infrastructure, including routers, switches, and firewalls to ensure secure and efficient data transmission.

Our network is designed with scalability in mind, allowing us to easily add or remove devices as needed to meet changing business demands.

We utilize virtual private networks (VPNs) to provide secure remote access to our network for employees working from home or on the go.

This helps to protect sensitive data from unauthorized access and ensures compliance with industry regulations.

Our firewalls are configured to block incoming traffic from unknown sources, reducing the risk of cyber attacks and data breaches.
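Blocking incoming traffic from unknown sources amounts to a default-deny rule with an allowlist of known networks. The sketch below uses Python's standard `ipaddress` module; the allowlist entries are hypothetical (taken from the address ranges reserved for documentation), and a real firewall would also match on destination, port, protocol, and connection state.

```python
import ipaddress

# Hypothetical allowlist of known source networks (documentation ranges).
ALLOWED_SOURCES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/25"),
]


def permit_inbound(source_ip: str) -> bool:
    """Default deny: permit inbound traffic only from a known source network."""
    addr = ipaddress.ip_address(source_ip)  # raises ValueError on malformed input
    return any(addr in net for net in ALLOWED_SOURCES)


for ip in ("192.0.2.10", "198.51.100.200", "203.0.113.5"):
    print(ip, "permit" if permit_inbound(ip) else "deny")
```

Listing what is allowed, rather than what is blocked, means a new or unexpected source is denied until someone deliberately adds it.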

We also apply software updates and patches regularly so that our systems run with the latest security features.

This helps to prevent vulnerabilities that could be exploited by hackers.

Frequently Asked Questions

What are internet data centers?

Data centers are physical facilities that house computing equipment, servers, and storage devices, storing a company's digital data. They provide the infrastructure needed to support IT systems and keep data safe and accessible.

How many internet data centers are there?

As of December 2023, there are approximately 10,978 data center locations worldwide, according to Cloudscene, Datacentermap, and Statista. This massive number highlights the growing demand for data storage and computing power.

What are the three types of data centers?

There are three main types of data centers: enterprise data centers, colocation data centers, and cloud data centers, each serving different purposes and user needs. Understanding the differences between these types can help you choose the best solution for your business or organization.

Featured Images: pexels.com