Getting started with Azure Integration Account is a straightforward process that requires minimal setup.

First, you need to create an Integration Account, which can be done through the Azure portal. This is the central hub for managing your integration services.

To create an Integration Account, you'll need to provide a name and a resource group. The name should be unique and descriptive, while the resource group should be organized in a way that makes sense for your organization.

The Integration Account is where you'll manage your B2B integration artifacts, including schemas, maps, assemblies, certificates, partners, and agreements.

Setup and Configuration

To set up a secure connection between your Premium integration account and Azure services, you can create a private endpoint for your integration account. This endpoint is a network interface that uses a private IP address from your Azure virtual network.

To create a private endpoint, sign in to the Azure portal with an account that has access to both your Premium integration account and your virtual network. Once you're signed in, you can follow the steps to set up a private endpoint.

If you monitor your Azure resources with Datadog, you can limit metric collection for Azure-based hosts. Open the Azure integration tile in Datadog and select the Configuration tab. From there, open App Registrations and enter a list of tags in the text box under Metric Collection Filters. The tags are written in KEY:VALUE form, separated by commas, and together they define the filter used while collecting metrics.
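As a minimal sketch of the filter format described above, the following Python helper joins tags into a comma-separated KEY:VALUE string. The function name and the example tag names (`env`, `team`) are hypothetical and only illustrate the format; they are not part of any Datadog API.

```python
def build_metric_filter(tags: dict[str, str]) -> str:
    """Join tags into a comma-separated KEY:VALUE filter string."""
    parts = []
    for key, value in tags.items():
        key, value = key.strip(), value.strip()
        if not key or not value:
            raise ValueError("tag keys and values must be non-empty")
        if "," in key or "," in value:
            # Commas separate tags, so they cannot appear inside one.
            raise ValueError("commas are reserved as the tag separator")
        parts.append(f"{key}:{value}")
    return ",".join(parts)


# Hypothetical tags, used only to show the resulting string.
filter_text = build_metric_filter({"env": "production", "team": "integration"})
print(filter_text)
```

The resulting string is what you would paste into the Metric Collection Filters text box.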

Your Premium integration account also requires specific roles to access your storage account, which are covered in the next section.

Set Up Storage Access

To set up storage access for your Premium integration account, you need to give it permission to read and write to your Azure storage account. This is done through the Azure portal, where you'll need to open your Premium integration account and navigate to the Identity settings.

Your integration account uses its automatically created and enabled system-assigned managed identity to authenticate access.

To grant access, add Azure role assignments on the storage account for the managed identity. The required roles are Storage Account Contributor, Storage Blob Data Contributor, and Storage Table Data Contributor.
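If you prefer scripting over the portal, the three role assignments could be sketched as Azure CLI calls. The Python snippet below only builds and prints the `az role assignment create` commands; it does not run them. The principal ID and storage account resource ID are placeholders that you would replace with your own values, and the helper name is hypothetical.

```python
import shlex

# The three roles named in the text above.
ROLES = [
    "Storage Account Contributor",
    "Storage Blob Data Contributor",
    "Storage Table Data Contributor",
]


def role_assignment_commands(principal_id: str, storage_account_id: str) -> list[list[str]]:
    """Build one `az role assignment create` command per required role."""
    return [
        [
            "az", "role", "assignment", "create",
            "--assignee-object-id", principal_id,
            "--assignee-principal-type", "ServicePrincipal",
            "--role", role,
            "--scope", storage_account_id,
        ]
        for role in ROLES
    ]


# Placeholder values: the managed identity's object ID and the
# storage account's resource ID are assumptions for illustration.
commands = role_assignment_commands("<principal-object-id>", "<storage-account-resource-id>")
for command in commands:
    print(shlex.join(command))
```

Printing the commands first makes it easy to review the scope and roles before running them in a shell.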

For the specific steps and more information on the roles and permissions required, see the article on Assign Azure role to system-assigned managed identity.

Private Endpoint Setup

Setting up a private endpoint for your Premium integration account is a great way to create a secure connection between your account and Azure services. This setup uses a private IP address from your Azure virtual network, keeping traffic within the Azure backbone network.

A private endpoint is a network interface that eliminates exposure to the public internet, reducing the risks from attacks. By using private endpoints, you can also help your organization meet data privacy and compliance requirements by keeping data within a controlled and secured environment.

To set up a private endpoint, you'll need to carefully plan your virtual network and subnet architecture to accommodate private endpoints. Make sure to properly segment and secure your subnets to ensure a smooth setup process.

Here are the benefits of using private endpoints:

- Eliminates exposure to the public internet and reduces the risks from attacks.

- Helps your organization meet data privacy and compliance requirements by keeping data within a controlled and secured environment.

- Reduces latency and improves workflow performance by keeping traffic within the Azure backbone network.

- Removes the need for complex network setups, such as virtual private networks or ExpressRoute.

- Saves on costs by reducing extra network infrastructure and avoiding data egress charges through public endpoints.

To enable calls from a Standard logic app through a private endpoint on a Premium integration account, include an HTTP action in your Standard logic app workflow at the point where you want to call the integration account.

Adding XML Validation

To add XML validation to your Logic App, the workflow first needs to start when an HTTP request is received. This is done by adding a Request trigger, which starts the Logic App.

Next, select "Add an action" and choose "XML Validation". To filter the actions to the one you want, type "xml" in the search box.

Then specify the XML content that you want to validate. In the "CONTENT" field, choose the body of the HTTP request as the content to validate.

The XML validation action will now be set up to validate the XML content of the HTTP request.

Adding Condition

To add a condition to your Logic App, click the "New Step" button and choose "Add a condition".

You can also click the + button next to the action you want to add the condition to. Then enter the condition using the @equals function, like this: `@equals(xpath(xml(body('Transform_XML')), 'string(count(/.))'), '1')`.

This checks whether the count returned for the transformed XML body is equal to 1.

Import

Import is a crucial step in the setup and configuration process. The type of import you'll need to do depends on the type of data you're working with. For example, if you're importing a new database, you'll need to follow the import database steps described in this section.

To import a new database, you'll need to use the import database tool, which can be accessed through the settings menu. This tool allows you to upload your database file and configure the import settings.

Importing a new database can take some time, depending on the size of the file and the speed of your internet connection. Be patient and let the process complete before moving on to the next step.

Data from the existing database will be overwritten during the import process. Make sure to back up any important data before proceeding.

Account Management

Managing your Azure Integration Account is a breeze, thanks to its intuitive interface.

You can access your account settings from the Azure portal, where you can view and edit your account details, including your subscription information and API keys.

The Azure Integration Account allows you to manage multiple connections to different Azure services, such as Azure Storage and Azure Cosmos DB, from a single location.

This makes it easy to monitor and control your data flows across different services, reducing the complexity of managing multiple connections separately.

To add a new connection to your Azure Integration Account, simply click on the "Connections" tab and select the service you want to connect to, such as Azure Blob Storage.

Once connected, you can view detailed information about your connections, including their status and any errors that may have occurred.

The Azure Integration Account also provides features like connection throttling and rate limiting, which help prevent overloading and ensure a smooth data flow.

These features are especially useful when working with large datasets or high-volume data flows, where performance and reliability are critical.

Integration with Azure

To integrate your Azure account with Datadog, you can use the Azure CLI or the Azure portal. The Azure CLI is a great option if you're comfortable with the command line, as it allows you to create a service principal and configure its access to Azure resources with just a few commands.

To create a service principal, run the `az ad sp create-for-rbac` command, which grants the service principal the Monitoring Reader role for the subscription you want to monitor. You'll also need to create an application as a service principal using the `az ad app create` command, and then configure its access to Azure resources using the `az role assignment create` command.
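As a rough illustration of the first command, the Python sketch below assembles an `az ad sp create-for-rbac` call that assigns the Monitoring Reader role at subscription scope. It only builds and prints the command. The subscription ID is a placeholder and the helper name is hypothetical.

```python
import shlex


def create_service_principal_command(subscription_id: str) -> list[str]:
    """Build the az command that creates a service principal with Monitoring Reader."""
    if not subscription_id:
        raise ValueError("subscription_id is required")
    return [
        "az", "ad", "sp", "create-for-rbac",
        "--role", "Monitoring Reader",
        # Scope the role to the subscription you want to monitor.
        "--scopes", f"/subscriptions/{subscription_id}",
    ]


# Placeholder subscription ID, used only for illustration.
command = create_service_principal_command("00000000-0000-0000-0000-000000000000")
print(shlex.join(command))
```

To actually run it from Python, you could pass the list to `subprocess.run(command, check=True)` on a machine where the Azure CLI is installed and signed in.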

Alternatively, you can use the Azure portal to integrate your Azure account with Datadog. In Datadog's Azure integration tile, select Configuration > New App Registration > Using Azure Portal. From there, you can select the region, subscription, and resource group for the template to be deployed, and then create a new app registration and client secret.

Once you've created the app registration and client secret, you'll be able to integrate your Azure account with Datadog and start collecting metrics and monitoring your Azure resources.

Private Endpoints Best Practices

To set up private endpoints for your Premium integration account, you'll want to carefully plan your virtual network and subnet architecture to accommodate them. This means properly segmenting and securing your subnets.

Private endpoints eliminate exposure to the public internet and reduce the risks from attacks. They also help your organization meet data privacy and compliance requirements by keeping data within a controlled and secured environment.

To ensure smooth connectivity and performance, thoroughly test your integration account's connectivity and performance with private endpoints before deploying to production. This will help you catch any issues before they become major problems.

Regularly monitoring network traffic to and from your private endpoints is also crucial. This can be done using tools such as Azure Monitor and Microsoft Defender for Cloud (formerly Azure Security Center), which can help you audit and analyze traffic patterns.

By following these best practices, you can ensure a secure and efficient connection between your Premium integration account and Azure services.

Portal Integration

To integrate your integration account with your logic app, you need to link them in the Azure portal. This can be done by selecting your integration account in the Integration account list under Workflow settings in your logic app's settings.

Make sure both resources use the same Azure subscription and Azure region for successful linking. You can find your integration account's callback URL in the Azure portal by searching for integration accounts, selecting your account, and navigating to the Callback URL settings.

To link your integration account to your logic app, follow these steps:

- In the Azure portal, open your logic app resource.

- On your logic app's navigation menu, under Settings, select Workflow settings.

- Under Integration account, open the Select an Integration account list, and select the integration account you want.

- To finish linking, select Save.

Once your integration account is successfully linked, Azure will show a confirmation message. This will allow your logic app workflow to use the artifacts in your integration account, plus B2B connectors like XML validation and flat file encoding or decoding.

Agent Installation

To install the Datadog Agent on your Azure VMs, you can use the Azure extension. This method supports Windows VMs, Linux x64 VMs, and Linux ARM-based VMs.

You'll need to follow these steps in the Azure portal: select the VM, go to Extensions + applications, click + Add, search for the Datadog Agent extension, and click Next. Then, enter your Datadog API key and Datadog site, and click OK.

Domain controllers are not supported when installing the Datadog Agent with the Azure extension.

Alternatively, you can install the Datadog Agent using the AKS Cluster Extension. This method allows you to deploy the Agent natively within Azure AKS, which can be more convenient than using third-party management tools.

To install the Datadog Agent with the AKS Cluster Extension, go to your AKS cluster in the Azure portal, select Extensions + applications, search for and select the Datadog AKS Cluster Extension, and click Create. Then, follow the instructions in the tile using your Datadog credentials and Datadog site.

Here are the steps to install the Datadog Agent using the Azure extension:

- Go to the Azure portal and select the VM.

- Go to Extensions + applications and click + Add.

- Search for and select the Datadog Agent extension.

- Click Next and enter your Datadog API key and Datadog site.

- Click OK to complete the installation.

Adding Transform XML

To add a Transform XML action to your Logic app, you'll need to select Add an action, then type "transform" in the search box to filter the actions. This will bring up the Transform XML action, which you should select.

The next step is to add the XML content that you want to transform. You can use any XML data you receive in the HTTP request as the content. To do this, select the body of the HTTP request that triggered the Logic app.

To complete the transformation, you'll need to select the name of the map you want to use. This map must already be in your Integration Account. If you've already given your Logic App access to your Integration Account, you should be able to find the map you need.

Before you can select a map in the Transform XML action, the map must be uploaded into your Integration Account. Here are some common upload scenarios:

- Uploading a single map into an Integration Account.

- Uploading a single map into an Integration Account and removing the file extension.

- Uploading a single map into an Integration Account and adding a prefix to the name of the map within the Integration Account.

- Uploading all maps located in a specific folder into an Integration Account.

- Uploading all maps located in a specific folder into an Integration Account and removing the file extension.

- Uploading all maps located in a specific folder into an Integration Account and adding a prefix to the name of the maps.

Schemas

Schemas are an essential part of integration with Azure, and understanding how to work with them is crucial for a seamless experience.

You can upload or update a single schema or multiple schemas into an Azure Integration Account, which is a great way to manage your schemas in one place.

To upload a single schema, you'll need to specify the ResourceGroupName and Name of the Azure Integration Account, as well as the SchemaFilePath. If you're uploading multiple schemas, you can specify the SchemasFolder instead.

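Here's a summary of the required parameters for uploading a single schema:

- ResourceGroupName: the name of the Azure resource group where the Integration Account is located.

- Name: the name of the Integration Account.

- SchemaFilePath: the full path of the schema to upload.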

You can also choose to remove the file extension from the schema name or add a prefix to it before uploading. This can be done by specifying the RemoveFileExtensions or ArtifactsPrefix parameters, respectively.

For example, if you want to upload a schema and remove the file extension, you would set RemoveFileExtensions to true.
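To make the naming options concrete, here is a small Python sketch of how an artifact name could be derived from a file path. It models the behavior described above under the assumption that the prefix is placed in front of the (optionally extension-less) file name. The function and its arguments are hypothetical and are not the upload script itself.

```python
from pathlib import Path


def artifact_name(file_path: str, remove_file_extensions: bool = False,
                  artifacts_prefix: str = "") -> str:
    """Derive the artifact name from a file path (assumed naming rule)."""
    path = Path(file_path)
    # Drop the extension only when requested, e.g. Order.xsd -> Order.
    name = path.stem if remove_file_extensions else path.name
    return f"{artifacts_prefix}{name}"


# Hypothetical file and prefix, used only for illustration.
print(artifact_name("schemas/Order.xsd"))
print(artifact_name("schemas/Order.xsd", remove_file_extensions=True))
print(artifact_name("schemas/Order.xsd", remove_file_extensions=True, artifacts_prefix="dev-"))
```

Keeping the extension by default preserves the original file name, while the prefix makes it easier to group artifacts that belong to one environment or project.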

Assemblies

Uploading assemblies into an Azure Integration Account is a straightforward process. You'll need to specify the ResourceGroupName, which is the name of the Azure resource group where the Integration Account is located.

To upload a single assembly, you'll need to provide the AssemblyFilePath, which is the full path of the assembly you want to upload. This is a mandatory parameter if you haven't specified the AssembliesFolder.

If you're uploading multiple assemblies, you can specify the AssembliesFolder, which is the path to a directory containing all the assemblies you want to upload. This is a mandatory parameter if you haven't specified the AssemblyFilePath.

You can also add a prefix to the name of the assemblies before uploading them by specifying the ArtifactsPrefix. This is an optional parameter, and it can be useful if you want to organize your assemblies in a specific way.

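Here are the parameters you'll need to specify for uploading assemblies:

- ResourceGroupName: the name of the Azure resource group where the Integration Account is located.

- Name: the name of the Integration Account.

- AssemblyFilePath: the full path of a single assembly to upload. Mandatory if AssembliesFolder isn't specified.

- AssembliesFolder: the path to a directory containing all the assemblies to upload. Mandatory if AssemblyFilePath isn't specified.

- ArtifactsPrefix: an optional prefix added to the name of the assemblies.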

You can choose to upload a single assembly, all assemblies in a folder, or a combination of both, depending on your needs.
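The "file path or folder" rule can be sketched in a few lines of Python. This is an illustration of the parameter logic as described in this section, not the upload script's actual implementation. It assumes that at least one of the two inputs must be given, and the function name is hypothetical.

```python
import tempfile
from pathlib import Path
from typing import Optional


def resolve_artifact_files(file_path: Optional[str] = None,
                           folder: Optional[str] = None) -> list[Path]:
    """Return the files to upload from a single path, a folder, or both."""
    if not file_path and not folder:
        raise ValueError("Specify a single file path or a folder")
    files: list[Path] = []
    if file_path:
        files.append(Path(file_path))
    if folder:
        # Sort for a predictable upload order.
        files.extend(sorted(p for p in Path(folder).iterdir() if p.is_file()))
    return files


# Demonstration with a temporary folder holding two empty placeholder files.
with tempfile.TemporaryDirectory() as folder:
    for file_name in ("Helpers.dll", "Mappers.dll"):
        (Path(folder) / file_name).write_bytes(b"")
    found = [p.name for p in resolve_artifact_files(folder=folder)]
print(found)
```

The same pattern applies to the other artifact types in this article, which follow the same single-file or folder convention.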

Certificates

Certificates are a crucial part of integrating with Azure, and understanding how to upload and manage them is essential.

You can upload a single public certificate into an Azure Integration Account by specifying the ResourceGroupName, Name, and CertificateType (set to Public). The CertificateFilePath is also required, which is the full path of the certificate.

To upload all public certificates located in a specific folder into an Azure Integration Account, you'll need to specify the ResourceGroupName, Name, and CertificateType (set to Public), along with the CertificatesFolder parameter, which is the path to the directory containing the certificates.

If you want to add a prefix to the name of the certificates within the Integration Account, you'll need to specify the ArtifactsPrefix parameter.

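Here are the parameters you'll need to specify for uploading a single public certificate or all public certificates in a folder:

- ResourceGroupName: the name of the Azure resource group where the Integration Account is located.

- Name: the name of the Integration Account.

- CertificateType: set to Public.

- CertificateFilePath: the full path of the certificate, when uploading a single certificate.

- CertificatesFolder: the path to the directory containing the certificates, when uploading all certificates in a folder.

- ArtifactsPrefix: an optional prefix added to the name of the certificates.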

To upload a single private certificate into an Azure Integration Account, you'll need to specify the ResourceGroupName, Name, and CertificateType (set to Private), along with the KeyName, KeyVersion, and KeyVaultId parameters.
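The difference between the public and private cases can be summarized as a small validation sketch in Python. It reports which of the parameters named in this section are missing for a given CertificateType. The function is hypothetical and the example values are placeholders.

```python
def missing_certificate_parameters(certificate_type: str, params: dict[str, str]) -> list[str]:
    """Return the names of required parameters that are absent or empty."""
    missing = [name for name in ("ResourceGroupName", "Name") if not params.get(name)]
    if certificate_type == "Public":
        # A public certificate comes from a single file or from a folder.
        if not (params.get("CertificateFilePath") or params.get("CertificatesFolder")):
            missing.append("CertificateFilePath or CertificatesFolder")
    elif certificate_type == "Private":
        # A private certificate additionally references a Key Vault key.
        missing += [n for n in ("KeyName", "KeyVersion", "KeyVaultId") if not params.get(n)]
    else:
        raise ValueError(f"unknown CertificateType: {certificate_type!r}")
    return missing


# Placeholder values for illustration only.
result = missing_certificate_parameters(
    "Private",
    {"ResourceGroupName": "my-resource-group", "Name": "my-integration-account", "KeyName": "my-key"},
)
print(result)
```

Running a check like this before an upload gives a clearer error than a failed call partway through a deployment.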

Partners

Partners are a crucial part of integrating with Azure, and understanding how to upload and manage them is essential.

To upload partners into an Azure Integration Account, you need to specify the ResourceGroupName and Name of the Integration Account.

The ResourceGroupName is the name of the Azure resource group where the Integration Account is located, and the Name is the name of the Integration Account itself.

You can upload a single partner by specifying the PartnerFilePath, which is the full path of the partner to be uploaded.

Alternatively, you can upload all partners located in a specific folder by specifying the PartnersFolder, which is the path to the directory containing all partners.

If you're uploading a single partner, you can also add a prefix to the name of the partner within the Integration Account by specifying the ArtifactsPrefix.

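Here's a summary of the parameters you can use when uploading partners:

- ResourceGroupName: the name of the Azure resource group where the Integration Account is located.

- Name: the name of the Integration Account.

- PartnerFilePath: the full path of a single partner to upload.

- PartnersFolder: the path to the directory containing all partners to upload.

- ArtifactsPrefix: an optional prefix added to the name of the partner.

The partner definition is represented as a JSON object, which you can view in the Azure portal.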

Agreements

Uploading agreements into an Azure Integration Account is a straightforward process that can be done in a few different ways.

You can upload a single agreement into an Integration Account by specifying the ResourceGroupName, Name, and AgreementFilePath parameters.

The AgreementFilePath parameter is the full path of the agreement that should be uploaded, and it's mandatory if the AgreementsFolder parameter hasn't been specified.

Alternatively, you can upload all agreements located in a specific folder into an Integration Account by specifying the ResourceGroupName, Name, and AgreementsFolder parameters.

The AgreementsFolder parameter is the path to a directory containing all agreements that should be uploaded, and it's mandatory if the AgreementFilePath parameter hasn't been specified.

You can also add a prefix to the name of the agreement within the Integration Account by specifying the ArtifactsPrefix parameter.

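Here are the parameters you'll need to specify when uploading agreements:

- ResourceGroupName: the name of the Azure resource group where the Integration Account is located.

- Name: the name of the Integration Account.

- AgreementFilePath: the full path of a single agreement to upload. Mandatory if AgreementsFolder isn't specified.

- AgreementsFolder: the path to a directory containing all agreements to upload. Mandatory if AgreementFilePath isn't specified.

- ArtifactsPrefix: an optional prefix added to the name of the agreement.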

List

To list integration accounts, you can use the `az logic integration-account list` command.

You can limit the number of items returned in the command's output with the `--max-items` argument. If the total number of items available is more than the value specified, a token is provided in the command's output.

To resume pagination, provide the token value in the `--next-token` argument of a subsequent command.

The token is the value from a previously truncated response and specifies where to start paginating.
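The token-based pagination pattern can be sketched in Python as a loop that keeps requesting pages until no token comes back. The `fake_fetch` function below stands in for a real call to the CLI and serves made-up data, so every name and value in it is an assumption for illustration.

```python
from typing import Callable, Iterator, Optional

Page = tuple[list[dict], Optional[str]]


def iterate_pages(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[dict]:
    """Yield items page by page, resuming from the token of the last response."""
    token: Optional[str] = None
    while True:
        items, token = fetch_page(token)
        yield from items
        if token is None:
            # No token means the last response was not truncated.
            return


# Made-up data standing in for the accounts a real command would return.
ACCOUNTS = [{"name": f"integration-account-{i}"} for i in range(1, 6)]


def fake_fetch(token: Optional[str], page_size: int = 2) -> Page:
    """Simulate a truncated response that carries a token for the next page."""
    start = int(token) if token else 0
    end = start + page_size
    next_token = str(end) if end < len(ACCOUNTS) else None
    return ACCOUNTS[start:end], next_token


names = [account["name"] for account in iterate_pages(fake_fetch)]
print(names)
```

In a real script, `fetch_page` would run the list command with the page size and, when present, the token from the previous response.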

Frequently Asked Questions

What are Azure integration accounts?

Azure Integration Accounts are a centralized platform for managing B2B and EDI integration scenarios, connecting businesses and facilitating data exchange. They serve as a hub for trading partners, agreements, and other essential artifacts.

How do I create an integration account in Azure portal?

To create an integration account in the Azure portal, search for "integration accounts" and select "Create" under the Integration accounts section. You'll then be prompted for details such as a name for the account and the Azure resource group used to organize related resources.

What is the Azure integration service?

Azure Integration Service connects apps, data, and services across environments, enabling streamlined workflows and automation. It simplifies business operations by facilitating seamless communication and data synchronization.

Sources

- https://learn.microsoft.com/en-us/azure/logic-apps/enterprise-integration/create-integration-account

- https://turbo360.com/blog/connecting-azure-logic-apps-with-integration-account

- https://scripting.arcus-azure.net/Features/powershell/azure-integration-account

- https://learn.microsoft.com/en-us/cli/azure/logic/integration-account

- https://docs.datadoghq.com/integrations/guide/azure-manual-setup/

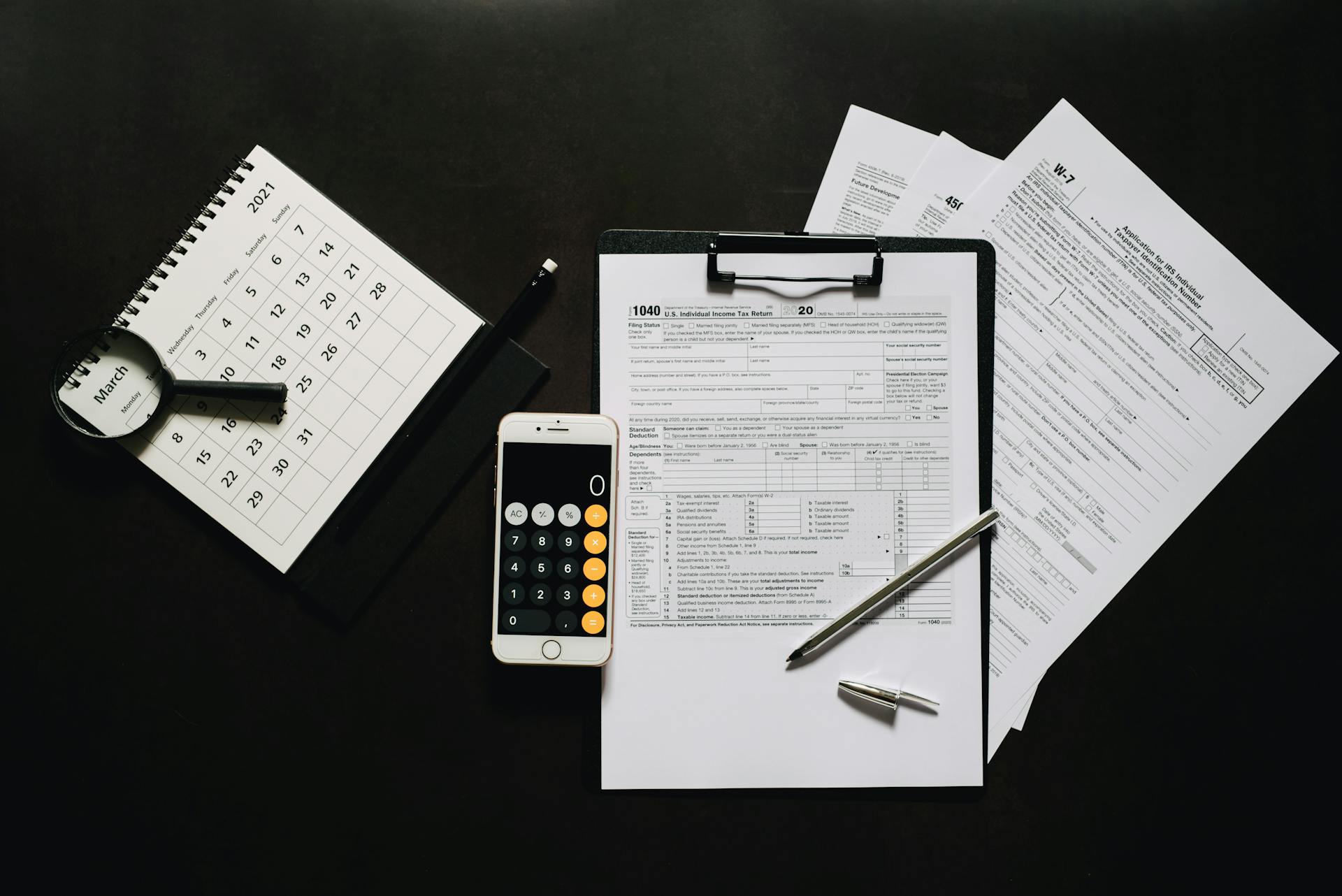

Featured Images: pexels.com