Building and deploying web services in Python is a straightforward process.

You can use the Flask web framework, which is a lightweight and flexible option for creating web services.

Flask is ideal for small to medium-sized applications and has a simple API that makes it easy to create and deploy web services.

One of Flask's key benefits is how little code it takes to map a URL to a Python function that handles the request and returns a response, which makes it quick to stand up small services and APIs.

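Python's built-in http.server module can also be used to create a simple web service, although the Python documentation warns that it implements only basic security checks and is not recommended for production.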
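As a rough illustration, the sketch below uses only the standard library: a `BaseHTTPRequestHandler` subclass answers `GET /health` with a small JSON body and returns 404 for anything else. The handler name, the `/health` path, and the default port are illustrative assumptions, not part of any particular setup.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: answers GET /health with a small JSON document."""

    def do_GET(self):
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            # Any other path gets a 404 page generated by the base class.
            self.send_error(404, "Not Found")


def build_server(host="127.0.0.1", port=8000):
    """Create (but do not start) the server; call serve_forever() to run it."""
    return HTTPServer((host, port), HealthHandler)


if __name__ == "__main__":
    build_server().serve_forever()
```

Running the file and opening `http://127.0.0.1:8000/health` should show the JSON document. For anything beyond local testing, a WSGI server such as gunicorn or Apache with mod_wsgi is the safer choice.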

Requirements and Setup

Before setting up your web service, make sure you have a Python-enabled instance of Machine Learning Server configured to host web services.

To set up gunicorn, a Python WSGI HTTP server for UNIX, you can create a gunicorn configuration file that specifies, among other settings, the location of the logs. You can also provide the log locations on the command line with --error-logfile and --access-logfile.

Here are the key arguments to include in your gunicorn command:

- First argument (-c): location of the gunicorn configuration file

- Second argument (-b): address and port where you want the server to listen

- Third argument: name of the Python module that contains the WSGI application code (for example, wsgi for a file named wsgi.py)

- Fourth argument (--workers): number of worker processes that gunicorn should launch

- Fifth argument (--name): name to assign to those processes
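Because a gunicorn configuration file is ordinary Python that gunicorn executes, the same settings can live in the file passed with `-c` instead of on the command line. The sketch below mirrors the options used in this setup (`-b 127.0.0.1:5000`, `--workers=1`, `--name attnGAN`) with gunicorn's `bind`, `workers`, `proc_name`, `errorlog`, and `accesslog` settings. The log paths are hypothetical placeholders; point them at your own log directory.

```python
# gunicorn.conf: sketch of the file passed to gunicorn with -c.
# gunicorn runs this file as Python and reads the module-level names below.

# Equivalent of -b 127.0.0.1:5000: address and port to listen on.
bind = "127.0.0.1:5000"

# Equivalent of --workers=1: number of worker processes to launch.
workers = 1

# Equivalent of --name attnGAN: name given to the gunicorn processes
# (shown in ps/top only if the setproctitle package is installed).
proc_name = "attnGAN"

# Equivalent of --error-logfile / --access-logfile.
# Hypothetical paths, relative to the directory gunicorn is started from.
errorlog = "logs/gunicorn-error.log"
accesslog = "logs/gunicorn-access.log"
```

Options given on the command line take precedence over values in the file, so you can keep defaults here and override them for a particular launch.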

Requirements

To use the web service management functions in the azureml-model-management-sdk Python package, you'll need to meet some requirements.

First and foremost, you'll need access to a Python-enabled instance of Machine Learning Server that's properly configured to host web services.

You'll also need to authenticate with Machine Learning Server in Python, which is described in a separate section called "Connecting to Machine Learning Server in Python."

To summarize, you'll need these two things to get started: a Python-enabled Machine Learning Server instance and proper authentication.

Configuring

Configuring mod_wsgi involves creating a configuration file, such as attngan-wsgi-config.conf, that holds the Apache directives for your WSGI application.

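You can choose between two modes: "embedded mode" and "daemon mode". Daemon mode is preferred because the application runs in its own processes, separate from the Apache worker processes, so you can reload code changes (for example, by touching the WSGI script file) without restarting the whole Apache server.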

The WSGIDaemonProcess directive sets up daemon mode. Its first argument is the name of the daemon process group, and the python-path option adds your site-packages folder to the Python path used by the WSGI application.

This is necessary because many Python packages are installed in .local/lib (for example, by pip install --user), which is not on the Python path of the Apache process, so without it you may get module not found errors.

The WSGIPythonHome directive should point to your base Python installation, which you can find by running `import sys; print(sys.prefix)`.
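To collect both values in one go, the small sketch below returns the interpreter's `sys.prefix` (the value suggested above for WSGIPythonHome) and the per-user site-packages directory from `site.getusersitepackages()`, which is where `pip install --user` places packages and so is a likely candidate for the `python-path` option. The function name is hypothetical. Run it with the same interpreter, and as the same user, that installed your packages.

```python
import site
import sys


def mod_wsgi_path_hints():
    """Hypothetical helper: values to paste into the mod_wsgi directives.

    - WSGIPythonHome: the prefix of the running interpreter (sys.prefix).
    - python-path: the per-user site-packages directory, typically
      ~/.local/lib/pythonX.Y/site-packages (it may not exist yet).
    """
    return {
        "WSGIPythonHome": sys.prefix,
        "WSGIDaemonProcess python-path": site.getusersitepackages(),
    }


if __name__ == "__main__":
    for directive, value in mod_wsgi_path_hints().items():
        print(f"{directive}: {value}")
```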

The WSGIScriptAlias directive sets the mapping between the URI mount point of your Python server and the absolute path name for the WSGI application file.

Restrictions apply to where these directives can appear; for example, the WSGIScriptAlias directive cannot be used inside the Location, Directory, or Files container directives.
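With WSGIScriptAlias, the URI mount point is passed to the application as the WSGI `SCRIPT_NAME` variable and the rest of the URL as `PATH_INFO`, as defined by the WSGI specification (PEP 3333). The hypothetical application below routes on `PATH_INFO` so it keeps working whatever mount point you choose; the `/attngan` prefix mentioned here is only an example.

```python
from wsgiref.util import application_uri


def application(environ, start_response):
    """Route on PATH_INFO so the app does not depend on its mount point."""
    # A request for the bare mount point (e.g. /attngan) arrives with an
    # empty PATH_INFO, so treat that the same as "/".
    path = environ.get("PATH_INFO", "") or "/"
    if path == "/":
        # application_uri() rebuilds the base URL, including SCRIPT_NAME.
        body = f"Mounted at {application_uri(environ)}\n"
        status = "200 OK"
    else:
        body = f"No route for {path}\n"
        status = "404 Not Found"
    data = body.encode("utf-8")
    start_response(status, [("Content-Type", "text/plain; charset=utf-8"),
                            ("Content-Length", str(len(data)))])
    return [data]
```

If the script were mounted at `/attngan`, a request for `/attngan/` would report the `/attngan` base URL, while `/attngan/missing` would reach the 404 branch with a `PATH_INFO` of `/missing`.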

In my setup, I placed the directives directly at the bottom of the httpd.conf file, but there is no requirement that the WSGI directives be in one file, so you can split them up and organize them as you see fit.

Setting Up

To set up your environment, you'll need to have access to a Python-enabled instance of Machine Learning Server, which must be properly configured to host web services. This is a requirement for using the azureml-model-management-sdk Python package.

You'll also need to authenticate with Machine Learning Server in Python as described in "Connecting to Machine Learning Server in Python." This will give you the necessary credentials to access the web service management functions.

To configure mod_wsgi, you'll need to create a configuration file. This file should contain the WSGIDaemonProcess directive, which sets up daemon mode for hosting the WSGI application. Its first argument is the name of the daemon process group, and the python-path option adds your site-packages folder to the WSGI Python path.

The WSGIPythonHome directive should point to your base Python installation, which you can find by running `import sys; print(sys.prefix)`. This is necessary to avoid module not found errors.

You can also use gunicorn to serve your WSGI application. To do this, launch gunicorn with the following command: `/home/bitnami/.local/bin/gunicorn -c ../conf/gunicorn.conf -b 127.0.0.1:5000 wsgi --workers=1 --name attnGAN`.

Creating a WSGI Application

Creating a WSGI application is a crucial step in building a Python web service. The WSGI application file is a Python file that defines the application object, a callable named application by default, which serves as the entry point of your Python application.

The content of the WSGI application file is where you define this application object. A good place to start is a basic file like attn_gan.wsgi, which obtains the Python version and prefix and returns that information as the body of an HTTP response.

This file serves as the entry point of your Python application and is a good starting point for your WSGI application. You can verify it by opening the URL it is mounted at in a browser and comparing the output with the result of running a similar command, such as `import sys; print(sys.version); print(sys.prefix)`, in a Python shell.
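The attn_gan.wsgi file itself is not reproduced here, so the following is only a minimal sketch of a script that does what the text describes: it returns `sys.version` and `sys.prefix` as a plain-text response. mod_wsgi looks for a callable named `application` by default, which is why that name is used.

```python
# attn_gan.wsgi: minimal sketch of a WSGI script file.
import sys


def application(environ, start_response):
    """Report which Python interpreter is serving this request."""
    body = (
        f"Python version: {sys.version}\n"
        f"Python prefix: {sys.prefix}\n"
    ).encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]
```

If the prefix in the browser differs from the one your shell reports, mod_wsgi is running a different interpreter than the one your packages were installed into, which usually means WSGIPythonHome needs adjusting.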

There are two main ways to set up mod_wsgi, the Apache module that implements the WSGI specification: you can install it into an existing Apache installation, or you can install it into your Python installation or virtual environment (typically with pip install mod_wsgi).

If you're having trouble with your WSGI application, check the Apache error log: the error_log file in the apache2/logs directory. This is where WSGI- and Python-related errors are recorded.
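Under mod_wsgi, anything the application writes to `environ["wsgi.errors"]` also ends up in the Apache error log, so you can add your own context next to the server's messages. The wrapper below is a hypothetical sketch: it logs the request path and traceback of an exception, then re-raises it so the server's own error handling still applies.

```python
import traceback


def log_to_error_log(app):
    """Hypothetical middleware: write diagnostics to environ["wsgi.errors"].

    Only exceptions raised before `app` returns are caught here; errors
    raised while the server iterates over the response are not.
    """
    def wrapper(environ, start_response):
        errors = environ["wsgi.errors"]
        try:
            return app(environ, start_response)
        except Exception:
            errors.write(f"Error handling {environ.get('PATH_INFO', '')}:\n")
            errors.write(traceback.format_exc())
            errors.flush()
            raise  # let the server produce its normal 500 response
    return wrapper
```

In a WSGI script you would apply it as `application = log_to_error_log(application)` after defining your application.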

Deploying the Application

Deploying the application is straightforward, and you have two main options. You can build and deploy your web service using the code in your GitHub/GitLab/Bitbucket repo.

This approach allows you to customize your application to meet your specific needs. By using your own code, you have complete control over the development and deployment process. You can make changes and updates as needed, and deploy them quickly.

Alternatively, you can pull a prebuilt Docker image from a container registry. This option is ideal if you want to deploy a web service quickly and easily, without having to build it from scratch.

Installing mod_wsgi

Building mod_wsgi from source is straightforward. Follow the mod_wsgi installation directions so the module is compiled against the same Apache and Python versions you will run it with.

You'll need to configure your environment, which involves specifying the location of the Apache apxs file and the Python interpreter. For a Bitnami installation, the location of the apxs file will be different, so your configure command will look like this:

./configure --with-apxs=/opt/bitnami/apache2/bin/apxs --with-python=/usr/bin/python3.6

After configuring, run make to generate the mod_wsgi.so file. Then copy the generated file into the Apache modules directory, /opt/bitnami/apache2/modules/.

To load mod_wsgi, add the following line to your Apache httpd.conf file:

LoadModule wsgi_module modules/mod_wsgi.so

Restart Apache to load mod_wsgi.

Before restarting, make sure Apache has permission to access the folder where your server files are located. A good starting point is to use chgrp to change the group of your server directory and its contents to the group Apache runs as (daemon in my case). Then set the group permissions with the chmod --reference option, copying the permissions from another folder that Apache can already access.
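If you prefer to script this step, the sketch below is a rough Python equivalent of `chgrp -R <group> <dir>` followed by `chmod -R --reference=<ref> <dir>`. The function name and arguments are hypothetical. Like `chmod --reference`, it copies the reference folder's full mode bits onto every entry, and the group change only succeeds if you own the files (or are root) and belong to the target group.

```python
import os
import shutil
import stat


def share_with_apache(server_dir, group, reference_dir):
    """Hypothetical helper: give `group` access to everything under server_dir.

    Mirrors `chgrp -R group server_dir` and
    `chmod -R --reference=reference_dir server_dir`.
    `group` may be a group name (e.g. "daemon") or a numeric gid.
    """
    # Permission bits of the reference folder (what chmod --reference copies).
    mode = stat.S_IMODE(os.stat(reference_dir).st_mode)
    for root, dirs, files in os.walk(server_dir):
        # Handle the directory itself plus the files it contains;
        # subdirectories are handled when os.walk descends into them.
        entries = [root] + [os.path.join(root, name) for name in files]
        for path in entries:
            if os.path.islink(path):
                continue  # leave symlinks (and their targets) alone
            shutil.chown(path, group=group)
            os.chmod(path, mode)
```

For example, `share_with_apache("/path/to/server", "daemon", "/path/to/apache-readable-folder")` would cover the setup described above, with both paths replaced by your own.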

Deploy Your Own Code

You can build and deploy your web service using the code in your GitHub/GitLab/Bitbucket repo, or you can pull a prebuilt Docker image from a container registry.

To get started, you'll need to access your code repository. This could be GitHub, GitLab, or Bitbucket, depending on where you've stored your project.

You can then use the code in your repository to build and deploy your web service. This is a great option if you want to customize your application or make changes to the code.

One thing to note is that using a prebuilt Docker image can be a convenient option, but it may limit your ability to make changes to the code.

Port Binding

Port binding is a crucial step in deploying your application on Render. Every Render web service must bind to a port on host 0.0.0.0 to serve HTTP requests.

The default value of the PORT environment variable is 10000, but you can override this value by setting the environment variable for your service in the Render Dashboard.

Render forwards inbound requests to your web service at the port defined by the PORT environment variable. It's essential to bind your HTTP server to this port to ensure successful deployment.

If you bind your HTTP server to a different port, Render is usually able to detect and use it. However, if Render fails to detect a bound port, your web service's deploy will fail and display an error in your logs.

There are some reserved ports that you cannot use, including 18012, 18013, and 19099. Make sure to avoid these ports when binding your HTTP server.

Always bind your public HTTP server to the value of the PORT environment variable, even if you bind your service to multiple ports for private network traffic.
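The port-binding rules above can be sketched in a few lines. This is a minimal illustration that uses the standard library's wsgiref server purely for demonstration (it is not meant for production): it reads PORT, falls back to Render's documented default of 10000, and listens on host 0.0.0.0. The placeholder `application` stands in for your real WSGI app. With gunicorn, the equivalent is passing `--bind 0.0.0.0:$PORT`.

```python
import os
from wsgiref.simple_server import make_server


def bind_address(default_port=10000):
    """Return the (host, port) pair a Render web service should listen on.

    Host 0.0.0.0 accepts connections on all interfaces; the port comes from
    the PORT environment variable, falling back to Render's default.
    """
    return "0.0.0.0", int(os.environ.get("PORT", default_port))


def application(environ, start_response):
    """Placeholder WSGI app; replace with your real application object."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"ok\n"]


if __name__ == "__main__":
    host, port = bind_address()
    with make_server(host, port, application) as httpd:
        httpd.serve_forever()
```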

Sources

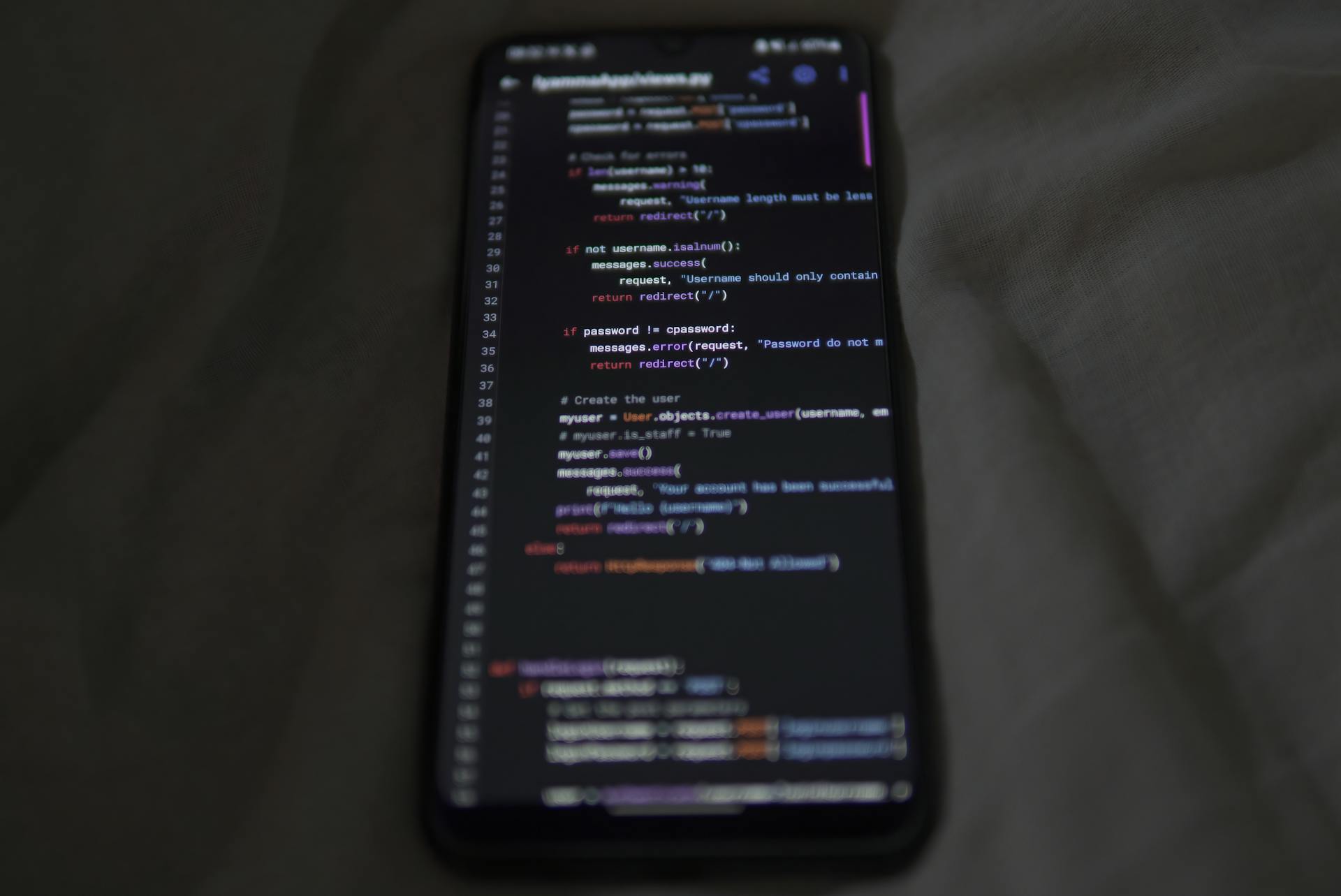

Featured Images: pexels.com